UC Berkeley celebrates Love Data Week with great talks and tips!

Kicking off #lovedata18 week @UCBerkeley wih a workshop about @Scopus API! @UCBIDS @UCBerkeleyLib pic.twitter.com/qnXbQp7a9j

— Yasmina Anwar (@yasmina_anwar) February 13, 2018

Last week, the University Library, the Berkeley Institute for Data Science (BIDS), and the Research Data Management program were delighted to host Love Data Week (LDW) 2018 at UC Berkeley. Love Data Week is a nationwide campaign designed to raise awareness about data visualization, management, sharing, and preservation. The theme of this year’s campaign was data stories: how data is being used in meaningful ways to shape the world around us.

At UC Berkeley, we hosted a series of events designed to help researchers, data specialists, and librarians better address and plan for research data needs. The events covered issues related to collecting, managing, publishing, and visualizing data. Attendees gained hands-on experience with using APIs, learned about resources that the campus provides for managing and publishing research data, and engaged in discussions around researchers’ data needs at different stages of their research process.

Participants from many campus groups (e.g., LBNL, CSS-IT) were eager to continue the stimulating conversation around data management. Check out the full program and information about the presented topics.

Photographs by Yasmin AlNoamany for the University Library and BIDS.

LDW at UC Berkeley kicked off with a walkthrough and demos of the Scopus APIs (Application Programming Interfaces), led by Eric Livingston of the publishing company Elsevier. Elsevier provides a set of APIs that allow users to access the content of journals and books published by Elsevier.

In the first part of the session, Eric provided a quick introduction to APIs and an overview of the Elsevier APIs. He illustrated the purposes of the different APIs that Elsevier provides, such as the ScienceDirect APIs, SciVal API, Engineering Village API, Embase APIs, and Scopus APIs. As Eric mentioned, anyone can get free access to Elsevier APIs, and the content published by Elsevier under Open Access licenses is fully available. Eric explained that Scopus APIs allow users to access curated abstracts and citation data from all scholarly journals indexed by Scopus, Elsevier’s abstract and citation database. He detailed several popular Scopus APIs, such as the Search API, Abstract Retrieval API, Citation Count API, Citation Overview API, and Serial Title API. Eric also gave an overview of the amount of data that the Scopus database holds.

In the second half of the workshop, Eric explained how Scopus APIs work and how to get an API key, and demonstrated different authentication methods. He walked the group through live queries, showing how to extract data from the APIs and how to debug queries using the advanced search. He talked about the limitations of the APIs and provided tips and tricks for working with Scopus APIs.

Eric left the attendees with working code and scripts for retrieving data from Scopus APIs.
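The workshop's own scripts are not reproduced in this post. As a rough illustration of the kind of query that was discussed, here is a minimal Python sketch of a Scopus Search API call authenticated with an API key. The endpoint URL, the `X-ELS-APIKey` header, and the `search-results` / `entry` / `dc:title` response fields reflect our understanding of Elsevier's public developer documentation and should be checked against it; the function names are hypothetical and chosen only for this example.

```python
import json
import urllib.parse
import urllib.request

# Assumed endpoint for the Scopus Search API (verify against Elsevier's docs).
SCOPUS_SEARCH_URL = "https://api.elsevier.com/content/search/scopus"


def build_search_request(query, api_key, count=5):
    """Build (but do not send) a GET request for a Scopus search."""
    params = urllib.parse.urlencode({"query": query, "count": count})
    return urllib.request.Request(
        f"{SCOPUS_SEARCH_URL}?{params}",
        # The API key is passed as a request header (assumed header name).
        headers={"X-ELS-APIKey": api_key, "Accept": "application/json"},
    )


def extract_titles(payload):
    """Pull document titles out of a decoded search response."""
    entries = payload.get("search-results", {}).get("entry", [])
    return [entry.get("dc:title", "") for entry in entries]


def search_titles(query, api_key):
    """Send the request and return titles; needs network access and a valid key."""
    with urllib.request.urlopen(build_search_request(query, api_key)) as response:
        return extract_titles(json.load(response))
```

Keeping the request building and the response parsing in separate functions makes it easier to debug a query (by printing the full URL) before spending any of the API quota on it.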

On the second day, we hosted a Data Stories and Visualization Panel, featuring Claudia von Vacano (D-Lab), Garret S. Christensen (BIDS and BITSS), Orianna DeMasi (Computer Science and BIDS), and Rita Lucarelli (Department of Near Eastern Studies). The talks and discussions centered upon how data is being used in creative and compelling ways to tell stories, in addition to rewards and challenges of supporting groundbreaking research when the underlying research data is restricted.

Claudia von Vacano, the Director of D-Lab, discussed the Online Hate Index (OHI), a joint initiative of D-Lab and the Anti-Defamation League’s (ADL) Center for Technology and Society that uses crowd-sourcing and machine learning to develop scalable detection of the growing amount of hate speech within social media. In its recently completed initial phase, the project focused on training a model based on an unbiased dataset collected from Reddit. Claudia explained the process, from identifying the problem, defining hate speech, and establishing rules for human coding, through building, training, and deploying the machine learning model. Going forward, the project team plans to improve the accuracy of the model and extend it to include other social media platforms.

Next, Garret S. Christensen, BIDS and BITSS fellow, talked about his experience with research data. He started by providing a background about his research, then discussed the challenges he faced in collecting his research data. The main research questions that Garret investigated are: How are people responding to military deaths? Do large numbers of, or high-profile, deaths affect people’s decision to enlist in the military?

Garret discussed the challenges of obtaining and working with Department of Defense data, which he obtained through a Freedom of Information Act request for the purpose of researching war deaths and military recruitment. Despite these challenges and the time he spent getting the data, he succeeded in assembling it into a public repository. The information on deaths in the US military from January 1, 1990 to November 11, 2010 is now available on Dataverse. Finally, Garret showed that deaths and recruitment have a negative relationship.

Orianna DeMasi, a graduate student in Computer Science and BIDS Fellow, shared her story of working with human subjects data. The focus of Orianna’s research is on building tools to improve mental healthcare. Orianna framed her story about collecting and working with human subjects data as a fairy tale. She pointed out that working with human data makes security and privacy essential. She has learned that it’s easy to get blocked “waiting for data” rather than advancing the project in parallel with collecting or accessing data. At the end, Orianna advised the attendees that “we need to keep our eyes on the big problems and data is only the start.”

Rita Lucarelli (Department of Near Eastern Studies) discussed the Book of the Dead in 3D project, which shows how photogrammetry can help with the visualization and study of different sets of data within their own physical context. According to Rita, the project aims in particular to create a database of “annotated” models of the ancient Egyptian coffins of the Hearst Museum. This is radically changing the scholarly approach to and study of these inscribed objects, while also posing a challenge in relation to data sharing and the publication of the artifacts. Rita indicated that metadata is growing and that digital data and digitization are challenging.

It was fascinating to hear about Egyptology and how to visualize 3D ancient objects!

We closed out LDW 2018 at UC Berkeley with a session about Research Data Management Planning and Publishing. In the session, Daniella Lowenberg (University of California Curation Center) started by discussing the reasons to manage, publish, and share research data on both practical and theoretical levels.

Daniella shared practical tips about why, where, and how to manage research data and prepare it for publishing. She discussed relevant data repositories that UC Berkeley and other entities offer. Daniella also illustrated how to make data reusable, and highlighted the importance of citing research data and how this maximizes the benefit of research.

At the end, Daniella presented a live demo on using Dash for publishing research data and encouraged UC Berkeley workshop participants to contact her with any questions about data publishing. In a lively debate, researchers shared with Daniella their experiences of managing research data and highlighted what has worked and what has proved difficult.

We have received overwhelmingly positive feedback from the attendees. Attendees also expressed their interest in having similar workshops to understand the broader perspectives and skills needed to help researchers manage their data.

I would like to thank BIDS and the University Library for sponsoring the events.

—

Yasmin AlNoamany

Love Data Week 2018 at UC Berkeley

The University Library, Research IT, and Berkeley Institute for Data Science will host a series of events on February 12th-16th during Love Data Week 2018. Love Data Week is a nationwide campaign designed to raise awareness about data visualization, management, sharing, and preservation.

Please join us to learn about multiple data services that the campus provides and discover options for managing and publishing your data. Graduate students, researchers, librarians and data specialists are invited to attend these events to gain hands-on experience, learn about resources, and engage in discussion around researchers’ data needs at different stages in their research process.

To register for these events and find out more, please visit: http://guides.lib.berkeley.edu/ldw2018guide

Schedule:

Intro to Scopus APIs – Learn about working with APIs and how to use the Scopus APIs for text mining.

01:00 – 03:00 p.m., Tuesday, February 13, Doe Library, Room 190 (BIDS)

Refreshments will be provided.

Data Stories and Visualization Panel – Learn how data is being used in creative and compelling ways to tell stories. Researchers across disciplines will talk about their successes and failures in dealing with data.

01:00 – 02:45 p.m., Wednesday, February 14, Doe Library, Room 190 (BIDS)

Refreshments will be provided.

Planning for & Publishing your Research Data – Learn why and how to manage and publish your research data as well as how to prepare a data management plan for your research project.

02:00 – 03:00 p.m., Thursday, February 15, Doe Library, Room 190 (BIDS)

Hope to see you there!

Great talks and fun at csv,conf,v3 and Carpentry Training

Day1 @CSVConference! This is the coolest conf I ever been to #csvconf pic.twitter.com/ao3poXMn81

— Yasmina Anwar (@yasmina_anwar) May 2, 2017

On May 2–5, 2017, I (Yasmin AlNoamany) was thrilled to attend the csv,conf,v3 2017 conference and the Software/Data Carpentry instructor training in Portland, Oregon, USA. It was a unique experience to meet and speak with many people who are passionate about data and open science.

The csv,conf,v3

The csv,conf is for data makers from academia, industry, journalism, government, and open source. We had four amazing keynotes by Mike Bostock, the creator of D3.js (a JavaScript library for data visualization); Angela Bassa, the Director of Data Science at iRobot; Heather Joseph, the Executive Director of SPARC; and Laurie Allen, who leads the Digital Scholarship Group at the University of Pennsylvania Libraries. The conference had four parallel sessions and a series of workshops about data. Check out the full schedule here.

.@ErinSBecker is talking @CSVConference about @datacarpentry & @swcarpentry workshops! #csvconf pic.twitter.com/beKOixMNrt

— Yasmina Anwar (@yasmina_anwar) May 2, 2017

If you wanna change the culture, start early! @ProjectJupyter @openscience @CSVConference #csvconf pic.twitter.com/7OVMjMvVrc

— Yasmina Anwar (@yasmina_anwar) May 2, 2017

"Speak out, step up, take actions" Great ending for an amazing keynote by @hjoseph @CSVConference #csvconf pic.twitter.com/O4Vogsfhe4

— Yasmina Anwar (@yasmina_anwar) May 2, 2017

Fascinated by @yasmina_anwar's research on using web archives to automatically create histories for the next generation. #csvconf pic.twitter.com/sbI359n5Bk

— V Ikeshoji-Orlati (@vikeshojiorlati) May 3, 2017

On the second day, I presented on generating stories from archived data in a talk entitled “Using Web Archives to Enrich the Live Web Experience Through Storytelling”. Check out the slides of my talk below.

I demonstrated the steps of the proposed framework, the Dark and Stormy Archives (DSA), in which we identify, evaluate, and select candidate Web pages from archived collections that summarize the holdings of these collections, arrange them in chronological order, and then visualize them using tools that users are already familiar with, such as Storify. For more information about this work, check out this post.

https://twitter.com/HamdanAzhar/status/859851223515582464

The csv,conf deserved to win the conference of the year prize for bringing the CommaLlama. The alpaca brought much joy and happiness to all conference attendees. It was fascinating to be at csv,conf 2017 and to meet and hear from people from everywhere who are passionate about data.

#commaLlama is in the chapel 😃! This is amazing! #csvconf @CSVConference pic.twitter.com/cnQJzQ9L3h

— Yasmina Anwar (@yasmina_anwar) May 3, 2017

#commaLlama with @CLIRnews fellows @vikeshojiorlati and @yasmina_anwar @CSVConference #csvconf pic.twitter.com/Mpfooy9ccP

— Yasmina Anwar (@yasmina_anwar) May 3, 2017

#Trump 😒 😡vs #Clinton😭💕 #emoji #emojis #electionsemojis #csvconf pic.twitter.com/yYoUzm158H

— Yasmina Anwar (@yasmina_anwar) May 3, 2017

#Data needs to be findable, accessible, interoperable, reusable! @rchampieux #csvconf pic.twitter.com/1VIQzHSIh4

— Yasmina Anwar (@yasmina_anwar) May 3, 2017

Shout out for all @CSVConference organizers! We enjoyed all the talks, keynotes, #commaLlama #csvconf #data pic.twitter.com/BLjRCCRYdu

— Yasmina Anwar (@yasmina_anwar) May 4, 2017

After the conference, Max Ogden from the Dat Data Project gave us a great tour from the conference venue to South Portland. We had great food from Portland’s street food trucks, and then a great time with adorable neighborhood cats!

https://twitter.com/yasmina_anwar/status/860017390968492032

The Carpentry Training

Congratulations, everyone! You did it! Portland 2017 cohort of @datacarpentry / @swcarpentry Instructors! @LibCarpentry #porttt pic.twitter.com/J3ceEzwOBQ

— Library Carpentry (@LibCarpentry) May 5, 2017

https://twitter.com/LibCarpentry/status/860571982479241216

The two days had a mix of lectures and hands-on exercises about learning philosophy and Carpentry teaching practices. It was a unique and fascinating experience. We had two energetic instructors, Tim Dennis and Belinda Weaver, who created a welcoming and collaborative environment for us. Check out the full schedule and lessons here.

.@yasmina_anwar just convinced me to fall in love with RStudio from her live code teaching example at #porttt.

— Elaine Wong (@elthenerd) May 5, 2017

Finally, I would like to acknowledge the support I had from the California Digital Library and the committee of the csv,conf for giving me this amazing opportunity to attend and speak at the csv,conf and the Carpentry instructor training. I am looking forward to applying what I learned in upcoming Carpentry workshops at UC Berkeley.

–Yasmin

Great talks and tips in Love Your Data Week 2017

Opening out #LYD17 w/great discussion of #securing research data. Thanks to our experts speakers @UCBIDS @DH @DLabAtBerkeley @ucberkeleylib pic.twitter.com/DqvIKb9sDL

— Yasmina Anwar (@yasmina_anwar) February 14, 2017

This week, the University Library and the Research Data Management program were delighted to participate in the Love Your Data (LYD) Week campaign by hosting a series of workshops designed to help researchers, data specialists, and librarians to better address and plan for research data needs. The workshops covered issues related to managing, securing, publishing, and licensing data. Participants from many campus groups (e.g., LBNL, CSS-IT) were eager to continue the stimulating conversation around data management. Check out the full program and information about the presented topics.

Photographs by Yasmin AlNoamany for the University Library.

The first day of LYD week at UC Berkeley was kicked off by a discussion panel about Securing Research Data, featuring Jon Stiles (D-Lab, Federal Statistical RDC), Jesse Rothstein (Public Policy and Economics, IRLE), and Carl Mason (Demography). The discussion centered upon the rewards and challenges of supporting groundbreaking research when the underlying research data is sensitive or restricted. In a lively debate, various social science researchers detailed their experiences working with sensitive research data and highlighted what has worked and what has proved difficult.

At the end, Chris Hoffman, the Program Director of the Research Data Management program, described a campus-wide project about Securing Research Data. Hoffman said the goals of the project are to improve guidance for researchers, benchmark other institutions’ services, and assess the demand and make recommendations to campus. Hoffman asked the attendees for their input about the services that the campus provides.

On the second day, we hosted a workshop about the best practices for using Box and bDrive to manage documents, files and other digital assets by Rick Jaffe (Research IT) and Anna Sackmann (UC Berkeley Library). The workshop covered multiple issues about using Box and bDrive such as the key characteristics, and personal and collaborative use features and tools (including control permissions, special purpose accounts, pushing and retrieving files, and more). The workshop also covered the difference between the commercial and campus (enterprise) versions of Box and Drive. Check out the RDM Tools and Tips: Box and Drive presentation.

We closed out LYD Week 2017 at UC Berkeley with a workshop about Research Data Publishing and Licensing 101. In the workshop, Anna Sackmann and Rachael Samberg (UC Berkeley’s Scholarly Communication Officer) shared practical tips about why, where, and how to publish and license your research data. (You can also read Samberg & Sackmann’s related blog post about research data publishing and licensing.)

In the first part of the workshop, Anna Sackmann talked about reasons to publish and share research data on both practical and theoretical levels. She discussed relevant data repositories that UC Berkeley and other entities offer, and provided criteria for selecting a repository. Check out Anna Sackmann’s presentation about Data Publishing.

During the second part of the presentation, Rachael Samberg illustrated the importance of licensing data for reuse and how copyright and the agreements researchers enter into affect licensing rights and choices. She also distinguished between data attribution and licensing. Samberg mentioned that data licensing helps resolve ambiguity about permissions to use data sets and incentivizes others to reuse and cite data. At the end, Samberg explained how people can license their data and advised UC Berkeley workshop participants to contact her with any questions about data licensing.

Check out the slides from Rachael Samberg’s presentation about data licensing below.

The workshops received positive feedback from the attendees. Attendees also expressed their interest in having similar workshops to understand the broader perspectives and skills needed to help researchers manage their data.

—

Yasmin AlNoamany

Special thanks to Rachael Samberg for editing this post.

Survey about “Understanding researcher needs and values about software”

Software is as important as data when it comes to building upon existing scholarship. However, while there has been a small amount of research into how researchers find, adopt, and credit software, there is currently a lack of empirical data on how researchers use, share, and value software and computer code.

The UC Berkeley Library and the California Digital Library are investigating researchers’ perceptions, values, and behaviors around the software generated as part of the research process. If you are a researcher, we would appreciate it if you could help us understand your current practices related to software and code by spending 10-15 minutes to complete our survey. We are aiming to collect responses from researchers across different disciplines. Survey responses will be collected anonymously.

Results from this survey will be used in the development of services to encourage and support the sharing of research software and to ensure the integrity and reproducibility of scholarly activity.

Take the survey now:

https://berkeley.qualtrics.com/jfe/form/SV_aXc6OrbCpg26wo5

The survey will be open until March 20th. If you have any questions about the study or a problem accessing the survey, please contact yasminal@berkeley.edu or John.Borghi@ucop.edu.

—

Yasmin AlNoamany

Could I re-research my first research?

Last week, one of my teammates at Old Dominion University contacted me and asked if she could apply some of the techniques I adopted in the first paper I published during my Ph.D. She asked about the data and any scripts I had used to pre-process the data and implement the analysis. I directed her to where the data was saved along with a detailed explanation of the structure of the directories. It took me a while to remember where I had saved the data and the scripts I had written for the analysis. At the time, I did not know about data management and the best practices to document my research.

I shared the scripts I generated for pre-processing the data with my colleague, but the information I gave her did not cover all the details regarding my workflow. There were many steps I had done manually for producing the input and the output to and from the pre-processing scripts. Luckily I had generated a separate document that had the steps of the experiments I conducted to generate the graphs and tables in the paper. The document contained details of the research process in the paper along with a clear explanation for the input and the output of each step. When we submit a scientific paper, we get reviews back after a couple of months. That was why I documented everything I had done, so that I could easily regenerate any aspect of my paper if I needed to make any future updates.

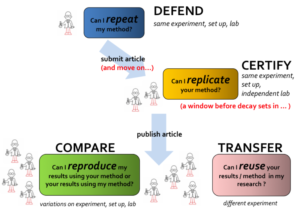

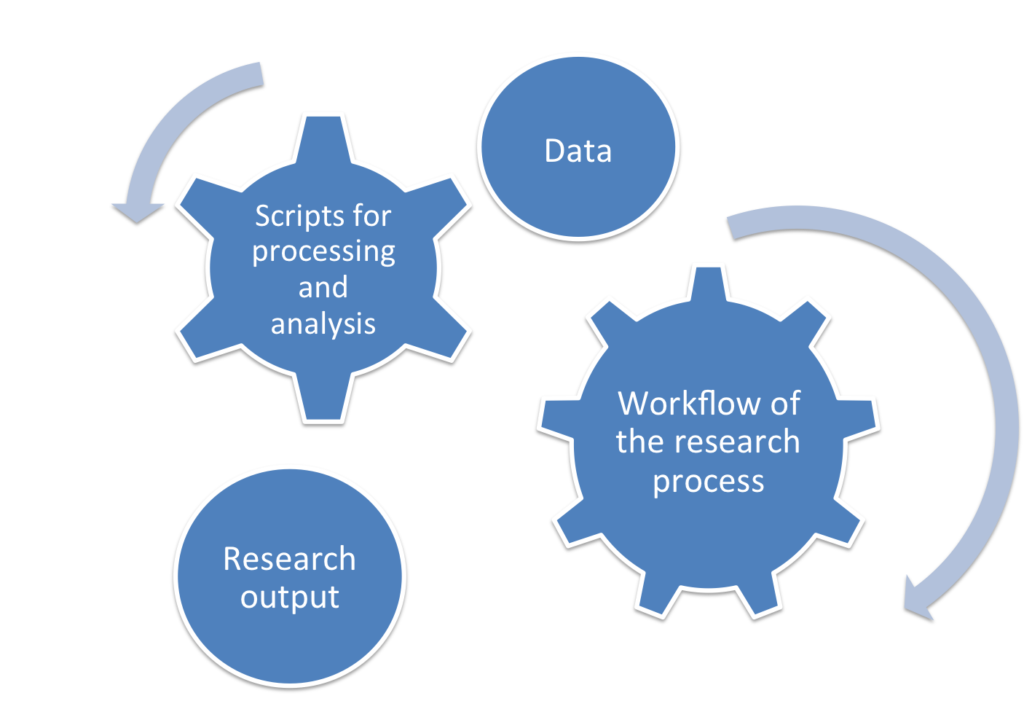

Documenting the workflow and the data of my research paper during the active phase of the research saved me the trouble of trying to remember all the steps I had taken if I needed to make future updates to my research paper. Now my colleague has all the entities of my first research paper: the dataset, the output paper of my research, the scripts that generated this output, and the workflow of the research process (i.e., the steps that were required to produce this output). She can now repeat the pre-processing for the data using my code in a few minutes.

Funding agencies have data management planning and data sharing mandates. Although these are important to scientific endeavors and research transparency, following good practices in managing research data and documenting the workflow of the research process is just as important. Reproducing research is not only about storing data. It is also about the best practices for organizing this data and documenting the experimental steps so that the data can be easily re-used and the research can be reproduced. Documenting the directory structure of the data in a file and attaching this file to the experiment directory would have saved me a lot of time. Furthermore, having clear guidance for the workflow and documentation on how the code was built and run is an important step toward making the research reproducible.
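As one possible way to document a directory structure in a file, here is a minimal Python sketch that walks an experiment directory and writes an outline of its folders and files into that same directory. This is an illustration only, not the approach I used at the time; the function names and the `DIRECTORY_STRUCTURE.txt` file name are hypothetical.

```python
import os


def describe_directory(root, exclude=()):
    """Return a text outline of every folder and file under root."""
    lines = []
    for current, dirnames, filenames in os.walk(root):
        dirnames.sort()  # visit subfolders in a stable, alphabetical order
        rel = os.path.relpath(current, root)
        depth = 0 if rel == "." else rel.count(os.sep) + 1
        name = os.path.basename(os.path.abspath(current))
        lines.append("  " * depth + name + "/")
        for filename in sorted(filenames):
            if depth == 0 and filename in exclude:
                continue  # skip top-level files such as the manifest itself
            size = os.path.getsize(os.path.join(current, filename))
            lines.append("  " * (depth + 1) + f"{filename} ({size} bytes)")
    return "\n".join(lines) + "\n"


def write_manifest(root, manifest_name="DIRECTORY_STRUCTURE.txt"):
    """Write the outline into root and return the path of the manifest."""
    outline = describe_directory(root, exclude=(manifest_name,))
    path = os.path.join(root, manifest_name)
    with open(path, "w", encoding="utf-8") as handle:
        handle.write(outline)
    return path
```

Calling `write_manifest("path/to/experiment")` (a placeholder path) after each major change keeps the outline current. A short note on what each folder contains still has to be written by hand, since a script can only record names and sizes.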

While I was working on my paper, I adopted multiple well-known techniques and algorithms for pre-processing the data. Unfortunately, I could not find any source code that implemented them, so I had to write new scripts for old techniques and algorithms. To advance scientific research, researchers should be able to build efficiently upon past research, and it should not be difficult for them to apply the basic tenets of the scientific method. My teammate should not have to re-implement the algorithms and techniques I adopted in my research paper. It is time to change the culture of scientific computing to sustain and ensure the integrity of reproducibility.

Research Advisory Service

Undergrads, get help with that research project from experts. Make an appointment for a 30-minute session with our library research specialists. We can help you narrow your topic, find scholarly sources, and manage your citations among other things. Make your appointment online!

Appointments are from 11am-5pm, Monday, September 26 – Friday, December 2. Meet your librarian at the Reference Desk, 2nd floor, Doe Library.

Post submitted by Lynn Jones, Reference Coordinator

Workshop: Out of the Archives, Into Your Laptop

Presenters:

- Mary Elings, Head of Digital Collections, Bancroft Library

- Lynn Cunningham, Principal Digital Curator, Art History Visual Resources Center

- Jason Hosford, Senior Digital Curator, Art History Visual Resources Center

- Jamie Wittenberg, Research Data Management Service Design Analyst, Research IT

- Camille Villa, Digital Humanities Assistant, Research IT

Report on the research needs of historians

Ithaka S+R (part of ITHAKA, which brings us JSTOR and Portico) has published the first of many studies it plans to conduct on the changing research methods and practices of scholars in various disciplines. Supporting the Changing Research Practices of Historians examines the needs of historians and provides suggestions for how research support providers (including libraries) can better serve them.

From their web site:

“Our interviews of faculty and graduate students reveal history as a field in transition. It is characterized by a vast expansion of new sources, widely adopted research practices and communication mechanisms shaped by new technologies, and a small but growing subset of scholars utilizing new methodologies to ask questions or share findings in fresh, unique ways.

Research support providers such as libraries, archives, humanities centers, scholarly societies, and publishers – not to mention academic departments that are often at the front line of educating the next generation of scholars – need to innovate in support of these changes. This report provides context and a set of recommendations that we hope will help.