Author: melings

The Surprising Ink Recipe of an Ancient Scribe

By Leah Packard-Grams

Ancient History & Mediterranean Archaeology PhD student

Center for the Tebtunis Papyri

Writers in the modern world often have a favorite pen, a preferred color of ink, or quirky handwriting, and ancient writers were no different! Scribal habits are a topic of increasing interest among scholars who study the ancient Mediterranean, and UC Berkeley’s papyrus collection is full of possibilities for the topic that has set researchers abuzz.

UC Berkeley’s collection of papyri, housed in the Center for the Tebtunis Papyri (CTP) on the fourth floor of The Bancroft Library, was excavated from the sands of Egypt 123 years ago, but it has often been remarked that the collection is rich enough to provide work for generations of scholars. The papyri provide crucial insights for our knowledge of daily life in Egypt and can illuminate individual priests, artisans, and scribes in extraordinary detail. One specific group of papyri caught my attention when CTP Director Todd Hickey mentioned it in a graduate course a few years ago: the archive of a scribe who worked for a local record-office in the 70-60s BCE. Since his name has not yet been deciphered from the surviving papyri, scholars refer to him as “Scribe X”. His papyri were found in the crocodile mummies excavated in Tebtunis, Egypt, on behalf of UC Berkeley over a century ago.

1. (Left: A crocodile mummy on display in the Object Lessons exhibit, Spring 2020. Right: a reed pen from Tebtunis held in the Hearst Museum at UC Berkeley, object no. 6-21420.)

The Scribe X papyri have been examined by several scholars, and two of them have been published in full, while dozens of fragments remain to be edited.1 Previous researchers have found this archive to be unique: It not only represents the oldest collection of documents from a village record-office (grapheion in Greek), it also displays the earliest use of the reed pen (kalamos in Greek) to write the Demotic Egyptian script, which was traditionally written with a rush. The Egyptian scribes of this era did not use the reed pen for Demotic so far as we know, and it would be decades before the reed was used with regularity for the Demotic script. In other words, Scribe X had a favorite pen, and he used it even though it differentiated him from the other scribes around him. This prompted a question: If the scribe used an “atypical” writing instrument, was the ink he used likewise “atypical”?

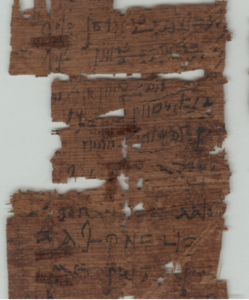

2. (A selection of lines from Scribe X’s account papyrus P.Tebt.UC 2489, displaying Demotic and Greek written on the same document, both written with the reed pen.)

Previous studies by a team of researchers in Europe have proved that there was a shift when scribes began to add copper sulfate to ancient inks of this period in the region of Tebtunis.2 This was a development from the earlier Egyptian ink recipe of gum arabic, soot, and water. In later periods, metallic elements such as lead and copper were intentionally added to the ink to make it more durable. Did this innovative scribe use a more recent ink recipe, or the traditional gum-soot-water recipe?

To find out, a chemical analysis using X-ray fluorescence spectrometry (XRF) was conducted in collaboration with UC Berkeley’s Archaeological Research Facility. One reason scholars favor the use of XRF for testing ancient objects is because it is nondestructive and extremely safe. A portable XRF spectrometer from the Archaeological Research Facility was brought to the Center for Tebtunis Papyri, where Dr Jesse Obert (a recent PhD from the Graduate Group in Ancient History and Mediterranean Archaeology) and I scanned multiple papyri from the Scribe X archive. The XRF spectrometer contains both an x-ray emitter with a precise 5 mm beam as well as a detector which identifies photons that are ejected from the atom when it is exposed to the x-rays. Different elements emit different photon signatures, and the detector “reads” the photon signatures to identify which elements are present in the atoms of the scanned area. We selected nine areas on one bilingual papyrus for scanning and included a number of uninscribed areas on the papyrus as a control. Several other papyrus fragments from the archive were also scanned in subsequent months.

3. (Dr Jesse Obert performing the initial scan with the portable XRF)

The results of the scans were surprising: The machine did not detect any metallic elements in the ink whatsoever. From this information, we can infer that Scribe X used the traditional ink recipe without any of the ink additives that were popular in the era! Such a finding was unforeseen because studies of comparable papyri did not attest to the use of this ink. At the same time, however, it is somewhat un-surprising, because it is well known that Ancient Egyptian traditions in art, religion, language, and culture endured even beyond Alexander the Great’s conquest of Egypt in 332 BCE. What this project has proven is that this ancient ink recipe was one of these enduring traditions, holding on longer than previously thought in the area of Tebtunis.

In April 2023, I presented this study in a poster for the annual meeting of the American Research Center in Egypt and received first place in the “Best Student Poster Competition.”3 More research on the archive is forthcoming (stay tuned for an upcoming feature in the Fall 2023 issue of Fiat Lux!). The project inspired me to get my own certification in XRF scanning, and my work with the archive has allowed me to become familiar with this scribe, his quirks, and his writing habits. The project and the prestigious award it has received are testaments to the open, collaborative attitude of UC Berkeley researchers working across disciplines and across the many research facilities and hubs across campus. I am extremely grateful to Dr Todd Hickey, Dr Jesse Obert, Dr Nicholas Tripcevich, and Directors of The Bancroft Library Charles Faulhaber and Kate Donovan, whose support and encouragement has enabled this project to come to fruition!

Notes

- The two papyri published thus far are edited by Parker 1972 and Muhs & Dieleman 2006. For assessment of the Demotic material, see Muhs 2009 and 2010. The Greek material was surveyed in Hoogendijk 2013.

- See the XRF ink study in Christiansen et al., 2017.

- See Packard-Grams 2023.

Sources

Christiansen, Thomas, et al. “Chemical Characterization of Black and Red Inks Inscribed on Ancient Egyptian Papyri: The Tebtunis Temple Library.” JAS Reports 17, 2017, pp. 208-219.

Hoogendijk, Francisca A.J.. “Greek Contracts Belonging to the Late Ptolemaic Tebtynis grapheion Archive?” in Das Fayyûm in Hellenismus und Kaiserzeit: Fallstudien zu multikulturellem Leben in der Antike, Carolin Arlt, Martin Andreas Stadler, and Ulrike Weinmann, eds., Wiesbaden: Harrassowitz Verlag, 2013, pp. 63-74.

Muhs, Brian. “The Berkeley Tebtunis Grapheion Archive,” in Actes du IXe Congrès des études démotiques, G. Widmer and D. Devauchelle, eds. BiEtud. 147. Cairo, 2009, pp. 243-252.

Muhs, Brian. “A Late Ptolemaic grapheion Archive in Berkeley,” in Proceedings of the Twenty-Fifth International Congress of Papyrology, Ann Arbor, 2007. American Studies in Papyrology, Ann Arbor, 2010, pp. 581-588.

Muhs, Brian, and Jacco Dieleman. “A Bilingual Account from Late Ptolemaic Tebtunis.” Zeitschrift für Ägyptische Sprache und Altertumskunde, vol. 133, 2006, pp. 56-65.

Packard-Grams, L. . “New Pen & Old Ink: XRF Analysis of a Unique Archive from 1st c. BCE Tebtunis,” X-Ray Fluorescence Reports: Archaeological Research Facility: UC Berkeley eScholarship Publications. 2023. https://escholarship.org/uc/item/25n2k5bn

Parker, R.A. “An Abstract of a Loan in Demotic from the Fayum.” Revue d’Égyptologie 24, 1972, pp. 129-136.

Social Problems During California’s Gold Rush Presaged Those We Face Today

by Louisa R. Brandt, an undergraduate student at UC Davis who spent her Summer 2015 break processing manuscripts at The Bancroft Library (originally posted to the “What’s New” blog on 2016/04/20)

Gold Rush-era letters, and others like them, are open for research at The Bancroft Library. Visit the library to conduct your own inquiries into the experiences of Californians living through past booms and busts.

As the Eastern United States met the West in the months and years following the 1848 gold discovery at Sutter’s Mill, California’s shores and gold-filled hills became riddled with problems the eager prospectors might have thought they had left behind: racial tension, concern over rainfall, economic disparities between neighbors, overcrowding and high rent. These sound familiar, don’t they?

At The Bancroft Library, recent acquisitions of letters sent from California during this widely-studied era illuminate through the voices of young men, both optimistic and pessimistic, how they saw this “land of opportunity” and tried to explain it to their relatives and friends back home. Although many of the letter writers in this collection are not famous, and some even unidentified, the aggregate of their experiences and descriptions paint an honest likeness of this not-so-foreign past.

The influx of prospective miners into California after January 1848 brought the racist stereotypes regarding the native population already common in the East to the forefront of western social interaction. Common claims of the day deriding the character of the Indians are seen in the December 25, 1852 letter by Abram Lanphear to his brother in New York as he calls the native population a “poor indolent lazy set of mortals” (BANC MSS 2015/12). Similar ideas drove the state military’s pursuit of revenge for the death of one sergeant during an altercation that had already left eight native people dead, as explained by Brigadier General Albert Maver Winn’s July 21, 1851 letter (BANC MSS 2015/16). Some men were not so blindly willing to believe the prejudice against Indians, and asserted that this hatred was sometimes used to cover for violence between whites. A Virginian miner in Butte County tells a friend of the “band of robbers who committed such wholesale & fiendish murders in our neighborhood” leaving victims with their “throats cut & arrows stuck all over them.” Despite the attempt to make the murders fit the Anglo perception of native warfare tactics, the author does believe that the Indians are blamed “probably falsly [sic]” (BANC MSS 2014/19). This method of exploiting the new immigrants’ fear and ingrained ideas of native people attacking was also used in San Francisco, as carpenter Christopher Toole notes that “the great trouble is with the indians but….the fault is not with indians it is with the whites” (BANC MSS 2015/19). That some people saw through efforts to make them believe in the evil of the native people mirrors today’s concerns over racial profiling.

Due to the need for water to wash gold from the gravel pulled from mines, and the fact that too much water made it impossible to reach the ore-rich hills, the amount of rain and river water was an important subject for men in the fields and in the cities. Miners writing from their claims often wished for a “wet winter” in order to have the rivers filled throughout the spring and summer months (BANC MSS 2014/12). When the rains came at an inopportune time, however, as was the case during the late winter of 1850, it created chaos as a dry February caused “such rush for the mines you never see in your life,” and the succeeding wet March sent the prospectors flooding back into cities (BANC MSS 2015/19). This dependence upon the rain is familiar to today’s Californians, who daily hear about the prolonged drought, and the possible flooding that could occur if the projected El Nino winter proves to be as strong as predicted. Even though many people do not rely solely on water for employment, the concern over water is unabated, and remains as common a topic of conversation today as it was during the Gold Rush.

Like most new careers, digging for gold held its fair share of monetary risk and depended on luck. A man identified only as “Charles” observed in 1850 that “99 out of 100” men get not a cent from mining (BANC MSS 2014/53). While some men struck it rich upon arrival, many were not so fortunate and spent months in squalid conditions. The different financial outcomes between men who dug for gold in the same areas proves just how random success was, and can be reflected in today’s business environment in which one new technology soars while a similar one fails. Frank William Bye, a miner who spent at least a decade in California’s gold fields, noted in 1852 that he “cleared over one hundred dollars per month.” This success did not allow him to forget that he was one of the few, as he follows by conceding that “hundreds of men equally as competent as myself [who] have been here all summer spend the last dollar” (BANC MSS 2014/58). An unidentified miner digging at the Yuba River in the Sacramento Valley perfectly describes how big the difference a short distance can be in his assessment of a claim located less than a mile from his where “there was a company of 20 men making 20 to $30 a day while all around there was many not making their board.” (BANC MSS 2015/3). What are now entrenched issues of economic disparity were already at play in the 1850s.

Rent and the cost of food in California are prime examples of too much demand for not enough land and supplies. Even though there were vastly fewer people in gold rush San Francisco than there are today, numerous letters marvel over the enormous sums asked for rent, which were far larger than anywhere else in the country. Architect Gordon Parker Cummings, whose contributions to California include San Francisco’s “Montgomery Block,” and Sacramento’s capitol building, complains to a friend in Pennsylvania that his two “3rd story rooms” in San Francisco rent for $900 a month, a cost that “would sound extravagant in Phill [but] it is a cheap one here” (BANC MSS 2014/35). The cost of basic foodstuffs also was drastically more expensive in California thanks especially to the difficulty of moving provisions from the port of San Francisco to the mines. In an 1853 letter to a friend, Charles Stone, a miner in Columbia, California (now a state park), notes at the beginning of the letter that flour costs $0.38 per pound, and by the end of the letter, written after a rainstorm, it was raised to $0.75 per pound (BANC MSS 2014/36).

Despite all of the unexpected hardships, California still held its charm for some newcomers who boasted of its “handsome buildings” and health benefits that were “worth all the Gold in California” (BANC MSS 2014/13; BANC MSS 2014/3). Even today, California is a beautiful place to live and generally has salubrious weather. So maybe there is more than one reason that millions continue to call it home.

– Louisa Brandt

Spring 2016 Born-Digital Archival Internship

The Bancroft Library University of California Berkeley

SPRING ARCHIVAL INTERNSHIP 2016

Come use your digital curation and metadata skills to help the Bancroft process born-digital archival collections!

Who is Eligible to Apply

Graduate students currently attending an ALA accredited library and information science program who have taken coursework in archival administration and/or digital libraries.

Please note: our apologies, but this internship does not meet SJSU iSchool credit requirements at this time.

Born-Digital Processing Internship Duties

The Born-Digital Processing Intern will be involved with all aspects of digital collections work, including inventory control of digital accessions, collection appraisal, processing, description, preservation, and provisioning for access. Under the supervision of the Digital Archivist, the intern will analyze the status of a born-digital manuscript or photograph collection and propose and carry out a processing plan to arrange and provide access to the collection. The intern will gain experience in appraisal, arrangement, and description of born-digital materials. She/he will use digital forensics software and hardware to work with disk images and execute processes to identify duplicate files and sensitive/confidential material. The intern will create an access copy of the collection and, if necessary, normalize access files to a standard format. The intern will generate an EAD-encoded finding aid in The Bancroft Library’s instance of ArchivesSpace for presentation on the Online Archive of California (OAC). All work will occur in the Bancroft Technical Services Department, and interns will attend relevant staff meetings.

Duration:

15 weeks (minimum 135 hours), January 28 – May 16, 2016 (dates are somewhat flexible). 1-2 days per week.

NOTE: The internship is not funded, however, it is possible to arrange for course credit for the internship. Interns will be responsible for living expenses related to the internship (housing, transportation, food, etc.).

Application Procedure:

The competitive selection process is based on an evaluation of the following application materials:

Cover letter & Resume

Current graduate school transcript (unofficial)

Photocopy of driver’s license (proof of residency if out-of-state school)

Letter of recommendation from a graduate school faculty member

Sample of the applicant’s academic writing or a completed finding aid

All application materials must be received by Friday, December 18, 2015, and either mailed to:

Mary Elings

Head of Digital Collections

The Bancroft Library

University of California Berkeley

Berkeley, CA 94720.

or emailed to melings [at] library.berkeley.edu, with “Born Digital Processing Internship” in the subject line.

Selected candidates will be notified of decisions by January 8, 2016.

It’s Electronic Records Day! Do You Know How to Save Your Stuff?

October 10, 2015 is Electronic Records Day – a perfect opportunity to remind ourselves of both the value and the vulnerability of electronic records. If you can’t remember the last time you backed up your digital files, read on for some quick tips on how to preserve your personal digital archive!

The Council of State Archivists (CoSA) established Electronic Records Day four years ago to raise awareness in our workplaces and communities about the critical importance of electronic records. While most of us depend daily on instant access to our email, documents, audio-visual files, and social media accounts, not everyone is aware of how fragile that access really is. Essential information can be easily lost if accounts are shut down, files become corrupted, or hard drives crash. The good news is there are steps you can take to ensure that your files are preserved for you to use, and for long-term historical research.

Check out CoSA’s Survival Strategies for Personal Digital Records (some great tips include “Focus on your most important files,” “Organize your files by giving individual documents descriptive file names,” and “Organize [digital images] as you create them”).

The Bancroft Library’s Digital Collections Unit is dedicated to preserving digital information of long-term significance. You can greatly contribute to these efforts by helping to manage your own electronic legacy, so please take a few minutes this weekend to back up your files (or organize some of those iPhone photos!) Together, we can take good care of our shared digital history.

Processing Notes: Digital Files From the Bruce Conner Papers

The following post includes processing notes from our summer 2015 intern Nissa Nack, a graduate student in the Master of Library and Information Science program at San Jose State University’s iSchool. Nissa successfully processed over 4.5 GB of data from the Bruce Conner papers and prepared the files for researcher access.

The digital files from the Bruce Conner papers consists of seven 700-MB CD-Rs (disks) containing images of news clippings, art show announcements, reviews and other memorabilia pertaining to the life and works of visual artist and filmmaker Bruce Conner. The digital files were originally created and then stored on the CD-Rs using an Apple (Mac) computer, type and age unknown. The total extent of the collection is measured at 4,517 MB.

Processing in Forensic Toolkit (FTK)

To begin processing this digital collection, a disk image of each CD was created and then imported into the Forensics Toolkit software (FTK) for file review and analysis. [Note: The Bancroft Library creates disk images of most computer media in the collections for long-term preservation.]

Unexpectedly, FTK displayed the contents of each disk in four separate file systems; HFS, HFS+, Joliet, and ISO 9660, with each file system containing an identical set of viewable files. Two of the systems, HFS and HFS+, also displayed discrete, unrenderable system files. We believe that the display of data in four separate systems may be due to the original files having been created on a Mac and then saved to a disk that could be read by both Apple and Windows machines. HFS and HFS+ are Apple file systems, with HFS+ being the successor to HFS. ISO 9660 was developed as a standard system to allow files on optical media to be read by either a Mac or a PC. Joliet is an extension of ISO 9660 that allows use of longer file names as well as Unicode characters.

With the presentation of a complete set of files duplicated under each file system, the question arose as to which set of files should be processed and ultimately used to provide access to the collection. Based on the structure of the disk file tree as displayed by FTK and evidence that a Mac had been used for file creation, it was initially decided to process files within the HFS+ system folders.

Processing of the files included a review and count of individual file types, review and description of file contents, and a search of the files for Personally Identifiable Information (PII). Renderable files identified during processing included Photoshop (.PSD), Microsoft Word (.DOC), .MP3, .TIFF, .JPEG, and .PICT. System files included DS_Store, rsrc, attr, and 8fs.

PII screening was conducted via pattern search for phone numbers, social security numbers, IP addresses, and selected keywords. FTK was able to identify a number of telephone numbers in this search; however, it also flagged groups of numbers within the system files as being potential PII, resulting in a substantial number of false hits.

After screening, the characteristics of the four file systems were again reviewed, and it was decided to use the Joliet file for export. Although the HFS+ file system was probably used to create and store the original files, it proved difficult to cleanly export this set of files from FTK. FTK “unpacked” the image files and displayed unrenderable resource, attribute and system files as discrete items. For example: for every .PSD file, a corresponding rsrc file could be found. The .PSD files can be opened, but the rsrc files cannot. The files were not “repacked” during export, and it is unknown as to how this might impact the images when transferred to another platform. The Joliet file system allowed us to export the images without separating any system-specific supporting files.

Issues with the length of file and path names were particularly felt during transfer of exported files to the Library network drive and, in some cases, after the succeeding step, file normalization.

File Normalization

After successful export, we began the task of file normalization whereby a copy of the master (original) files were used to produce access, and preservation surrogates in appropriate formats. Preservation files would ideally be in a non-compressed format that resists deterioration and/or obsolescence. Access surrogates are produced in formats that are easily accessible across a variety of platforms. .TIFF, .JPEG, .PICT, and .PSD files were normalized to the .TIFF format for preservation and the .JPEG format for access. Word documents were saved in the .PDF format for preservation and access, and .MP3 recordings were saved to .WAV format for preservation and a second .MP3 copy created for access.

Normalization Issues

Photoshop

Most Photoshop files converted to .JPEG and .TIFF format without incident. However, seven files could make the transfer to .TIFF but not to .JPEG. The affected files were all bitmap images of typewritten translations of reviews of Bruce Conner’s work. The original reviews appear to have been written for Spanish language newspapers.

To solve the issue, the bitmap images were converted to grayscale mode and from that point could be used to produce a .JPEG surrogate. The conversion to grayscale should not adversely impact file as the original image was of a black and white typewritten document, not of a color imbued object.

PICT

The .PICT files in this collection appeared in FTK and exported with a double extension (.pct.mac), and couldn’t be opened by either Mac or PC machines. Adobe Bridge was used to locate and select the files and then, using the “Batch rename” feature under the Tools menu, to create a duplicate file without the .mac in the file name.

The renamed .PCT files were retained as the master copies, and files with a duplicate extension were discarded.

Adobe Bridge was then used to create .TIFF and .JPEG images for the Preservation and Access files as in the case of .PSD files.

MP3 and WAV

We used the open-source Audacity software to save .MP3 files in the .WAV format, and to create an additional .MP3 surrogate. Unfortunately the Audacity software appeared to be able to process only one file type at a time. In other words, each original .MP3 file had to be individually located and exported as a .WAV file, which was then used to create the access .MP3 file. Because there were only six .MP3 files in this collection, the time to create the access and preservation files was less than an hour. However, if in the future a larger number of .MP3s need to be processed, an alternate method or workaround will need to be found.

File name and path length

The creator of this collection used long, descriptive file names with no real apparent overall naming scheme. This sometimes created a problem when transferring files, as the resulting path names of some files would exceed the allowable character limits and not allow the file to transfer. The “fix” was to eliminate words/characters while retaining as much information as possible from the original file name until a transfer could occur.

Processing Time

Processing time for this project, including time to create the processing plan and finding aid, was approximately 16 working days. However, a significant portion of the time, approximately ¼ to 1/3, was spent learning the processes and dealing with technological issues (such as file renaming or determining which file system to use).

BANCROFT SUMMER ARCHIVAL INTERNSHIP 2015

The Bancroft Library University of California Berkeley

SUMMER ARCHIVAL INTERNSHIP 2015

Who is Eligible to Apply

Graduate students currently attending an ALA accredited library and information science program who have taken coursework in archival administration and/or digital libraries.

Born-Digital Processing Internship Duties

The Born-Digital Processing Intern will be involved with all aspects of digital collections work, including inventory control of digital accessions, collection appraisal, processing, description, preservation, and provisioning for access. Under the supervision of the Digital Archivist, the intern will analyze the status of a born-digital manuscript or photograph collection and propose and carry out a processing plan to arrange and provide access to the collection. The intern will gain experience in appraisal, arrangement, and description of born-digital materials. She/he will use digital forensics software and hardware to work with disk images and execute processes to identify duplicate files and sensitive/confidential material. The intern will create an access copy of the collection and, if necessary, normalize access files to a standard format. The intern will generate an EAD-encoded finding aid in The Bancroft Library’s instance of ArchivesSpace for presentation on the Online Archive of California (OAC). Lastly, the intern will complete a full collection-level MARC catalog record for the collection using the University Library’s Millennium cataloging system. All work will occur in the Bancroft Technical Services Department, and interns will attend relevant staff meetings.

Duration:

6 weeks (minimum 120 hours), June 29 – August 7, 2015 (dates are somewhat flexible)

NOTE: The internship is not funded, however, it may be possible to arrange for course credit for the internship. Interns will be responsible for living expenses related to the internship (housing, transportation, food, etc.).

Application Procedure:

The competitive selection process is based on an evaluation of the following application materials:

Cover letter & Resume

Current graduate school transcript (unofficial)

Photocopy of driver’s license (proof of residency if out-of-state school)

Letter of recommendation from a graduate school faculty member

Sample of the applicant’s academic writing or a completed finding aid

All application materials must be postmarked on or before Friday, April 17, 2015 and either mailed to:

Mary Elings

Head of Digital Collections

The Bancroft Library

University of California Berkeley

Berkeley, CA 94720.

or emailed to melings [at] library.berkeley.edu, with “Born Digital Processing Internship” in the subject line.

Selected candidates will be notified of decisions by May 1, 2015.

Bancroft Library Processes First Born-Digital Collection

The Bancroft Library’s Digital Collections Unit recently finished a pilot project to process its first born-digital archival collection: the Ladies’ Relief Society records, 1999-2004. Based on earlier work and recommendations by the Bancroft Digital Curation Committee (Mary Elings, Amy Croft, Margo Padilla, Josh Schneider, and David Uhlich) we’re implementing best-practice procedures for acquiring, preserving, surveying, and describing born-digital files for discovery and use.

Read more about our efforts below, and check back soon for further updates on born-digital collections.

State of the Digital Archives: Processing Born-Digital Collections at the Bancroft Library (PDF)

Abstract:

This paper provides an overview of work currently being done in the Bancroft’s Digital Collections Unit to preserve, process, and provide access to born-digital collections. It includes background information about the Bancroft’s Born Digital Curation Program and discusses the development of workflows and strategies for processing born-digital content, including disk imaging, media inventories, hardware and software needs and support, arrangement, screening for sensitive content, and description. The paper also describes DCU’s pilot processing project of the born-digital files from the Ladies’ Relief Society records.

Bancroft to Explore Text Analysis as Aid in Analyzing, Processing, and Providing Access to Text-based Archival Collections

Mary W. Elings, Head of Digital Collections, The Bancroft Library

The Bancroft Library recently began testing a theory discussed at the Radcliffe Workshop on Technology & Archival Processing held at Harvard’s Radcliffe College in early April 2014. The theory suggested that archives can use text analysis tools and topic modelling — a type of statistical model for discovering the abstract “topics” that occur in a collection of documents — to analyze text-based archival collections in order to aid in analyzing, processing and describing collections, as well as improving access.

Helping us to test this theory, the Bancroft welcomed summer intern Janine Heiser from the UC Berkeley School of Information. Over the summer, supported by an ISchool Summer Non-profit Internship Grant, Ms. Heiser worked with digitized analog archival materials to test this theory, answer specific research questions, and define use cases that will help us determine if text analysis and topic modelling are viable technologies to aid us in our archival work. Based on her work over the summer, the Bancroft has recently awarded Ms. Heiser an Archival Technologies Fellowship for 2015 so that she can continue the work she began in the summer and further develop and test her work.

During her summer internship, Ms. Heiser created a web-based application, called “ArchExtract” that extracts topics and named entities (people, places, subjects, dates, etc.) from a given collection. This application implements and extends various natural language processing software tools such as MALLET and the Stanford Core NLP toolkit. To test and refine this web application, Ms. Heiser used collections with an existing catalog record and/or finding aid, namely the John Muir Correspondence collection, which was digitized in 2009.

For a given collection, an archivist can compare the topics and named entities that ArchExtract outputs to the topics found in the extant descriptive information, looking at the similarities and differences between the two in order to verify ArchExtract’s accuracy. After evaluating the accuracy, the ArchExtract application can be improved and/or refined.

Ms. Heiser also worked with collections that either have minimal description or no extant description in order to further explore this theory as we test the tool further. Working with Bancroft archivists, Ms. Heiser will determine if the web application is successful, where it falls short, and what the next steps might be in exploring this and other text analysis tools to aid in processing collections.

The hope is that automated text analysis will be a way for libraries and archives to use this technology to readily identify the major topics found in a collection, and potentially identify named entities found in the text, and their frequency, thus giving archivists a good understanding of the scope and content of a collection before it is processed. This could help in identifying processing priorities, funding opportunities, and ultimately helping users identify what is found in the collection.

Ms. Heiser is a second year masters’ student at the UC Berkeley School of Information where she is learning the theory and practice of storing, retrieving and analyzing digital information in a variety of contexts and is currently taking coursework in natural language processing with Marti Hearst. Prior to the ISchool, Ms. Heiser worked at several companies where she helped develop database systems and software for political parties, non-profits organizations, and an online music distributor. In her free time, she likes to go running and hiking around the bay area. Ms. Heiser was also one of our participants in the #HackFSM hackathon! She was awarded an ISchool Summer Non-profit Internship Grant to support her work at Bancroft this summer and has been awarded an Archival Technologies Fellowship at Bancroft for 2015.

#HackFSM Whitepaper is out: “#HackFSM: Bootstrapping a Library Hackathon in Eight Short Weeks”

The Bancroft Library and Research IT have just published a whitepaper on the #HackFSM hackathon: “#HackFSM: Bootstrapping a Library Hackathon in Eight Short Weeks.”

Abstract:

This white paper describes the process of organizing #HackFSM, a digital humanities hackathon around the Free Speech Movement digital archive, jointly organized by Research IT and The Bancroft Library at UC Berkeley. The paper includes numerous appendices and templates of use for organizations that wish to hold a similar event.

Publication download: ![]() HackFSM_bootstrapping_library_hackathon.pdf

HackFSM_bootstrapping_library_hackathon.pdf

Citation:

“#HackFSM: Bootstrapping a Library Hackathon in Eight Short Weeks”. Dombrowski, Quinn, Mary Elings, Steve Masover, and Camille Villa. “#HackFSM: Bootstrapping a Library Hackathon in Eight Short Weeks”. Research IT at Berk. Published October 3, 2014.

From: http://research-it.berkeley.edu/publications/hackfsm-bootstrapping-library-hackathon-eight-short-weeks

Digital Humanities and the Library

The topic of Digital Humanities (and Social Sciences Computing) has been a ubiquitous one at recent conferences, and this is no less true of The 53rd annual RBMS “Futures” Preconference in San Diego that took place June 19-22, 2012. The opening plenary, “Use,” on Digital Humanities featured two well-known practitioners in this field, Bethany Nowviskie of the University of Virginia and Matthew G. Kirschenbaum of the University of Maryland. For those of us who have been working in the digital library and digital collection realm for many years, Bethany’s discussion of the origins and long history of digital humanities was no surprise. Digitized library and special collection materials have been the source content used by digital humanists and digital librarians to carry out their work since the late 1980s. As a speaker at one of the ACH-ALLC programs in 1999, I was exposed to the digital tools and technologies being used to support research and scholarly exploration in what was then called linguistic and humanities computing. This work encompassed not only textual materials, but also still images, moving images, databases, and geographic materials; the stuff upon which current digital humanities and social sciences efforts are still based. What I learned then—and what the plenary speakers confirmed at this conference—is that this work has and continues to be collaborative and interdisciplinary. Long-established humanities computing centers at the Universities of Virginia and Maryland have supported this work for years, and they have had a natural partner in the library. Over the years, humanities computing centers have continued to evolve, often set within or supported by the library, and the field that is now known as Digital Humanities has gained prominence. The fact that this plenary opened the conference indicates that this topic is an important one to our community.

As scholars’ work is increasingly focused on digital materials, either digitized from physical collections or born-digital, we are seeing more demand for digital content and tools to carry out digital analysis, visualization, and computational processing, among other activities. Perhaps this is due to the maturation of the field of humanities computing, or the availability of more digital source content, or the rise of a new generation of digital native researchers. Whatever the reason, the role of the library (and the archive and the museum, for that matter) is central to this work. The library is an obvious source of digital materials for these scholars to work with, as was pointed out by both speakers.

Libraries can play a central role in providing access to this content through traditional activities, such as cataloging of digital materials, supporting digitization initiatives, and acquisition of digital content, as well as taking on new activities, such as supporting technology solutions (like digital tools), providing digital lab workspaces, and facilitating bulk access to data and content through mechanisms such as APIs. Just as we have built and facilitated access to analog research materials, we need to turn our attention to building and supporting use of digital research collections.

As Bethany stressed in her talk, we need more digital content for these scholars to work with and use. Digital humanities centers can partner with libraries to increase the scale of digitized materials in special collections or can give us tools to work with born-digital archives from pre-acquisition assessment through access to users, such as the tools being developed by Matthew’s “Bit Curator” project . By providing more content and taking the “magic” out of working with digital content, greater use can be facilitated. Unlike with physical materials, as Bethany pointed out, digital materials require use in order to remain viable, so the more we use digital materials, the longer they will last. She referred to this as “tactical preservation,” saying that our digital materials should be “bright keys,” in that the more they are used the brighter they become. By increasing use—making it easier to access and work with digital materials—we can ensure digital “futures” for our collections, whether physical or born-digital.

The collaborative nature of Digital Humanities projects — and centers — brings together researchers, technologists, tools, and content. These “places” may take various forms, but in almost all cases, the library and the historical content it collects and preserves plays a central role as the “stuff” of which digital humanities research and scholarly production is made. With its historical role in collecting and providing access to research materials, supporting teaching and learning, and long affinity with using technology for knowledge discovery, the library is well-positioned to support this work and become an even more active partner in the digital humanities and social sciences computing.

Mary W. Elings

Head of Digital Collections

(this text is excerpted and derived from an article written for RBM: A Journal of Rare Books, Manuscripts, and Cultural Heritage).