Author: Anna Sackmann

NEW DMPTool Launched!

A shiny new version of the DMPTool was launched at the end of February. The big change, beyond the new color scheme and layout, is that it is now a single source platform for all DMPs. It now incorporates the codebase from other instances of the program from all over the world, including: DMPTuuli (Finland), DMP Melbourne (Australia), DMP Assistant (Canada), DMPOnline (Europe), and many more! The move was made to combine all platforms into one in order to focus on best practices at an international level. Please learn more about the new instance by visiting the DMPTool Blog.

Love Data Week 2018!

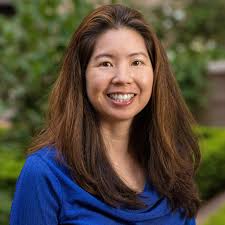

Alice Fan, MD: New Cancer Therapies & Women in Science

The last Nanoscale Science and Engineering (NSE) seminar of the semester is scheduled for Friday, December 1st from 2:00 – 3:00 in 180 Tan Hall. Alice Fan, from the Stanford Medical School, will be speaking on new nanoimmunoassays that enable the isolation and analysis of tumor cells. Following her talk, the Graduate Women in Engineering (GradSWE) will host a coffee hour from 3:30-4:30 in 242 Sutardja Dai Hall.

Engineering Academic Challenge!

The Elsevier Engineering Academic Challenge is back! The team-based challenge lasts for five weeks and began on September 18. Register here to get started and win prizes!

UPDATE: Elsevier Data Publishing Requirements

Last spring, we posted about data publishing requirements from Elsevier, Springer/Nature, and AAAS. At the time, Elsevier had the most lenient data publishing policies, with language that merely suggested and encouraged data publishing. As of September 5th, 2017, that is no longer the case. Elsevier has signed on to the Transparency and Openness Promotion (TOP) Guidelines through the Center for Open Science. We talk and write a lot about transparency, openness, and sharing in science; however, there is a disconnect between the conversations and the daily workflows and practice of scientists. I was once told, after giving a workshop on data sharing, that I was an idealist trying to preach to realists. In order to close that gap, we need more publishers, like Elsevier, to make the ideal a reality, and enforce strict guidelines on data sharing and publishing.

Let’s take a look at the 5 new data sharing requirements, which will be implemented for 1800 of Elsevier’s titles:

Option A: you are encouraged to

- deposit your research data in a relevant repository

- cite this dataset in your article

Option B: you are encouraged to

- deposit your research data in a relevant repository

- cite this dataset in your article

- link this dataset in your article

- If you can’t do this, be prepared to explain why!

Option C: you are required to

- deposit your research data in a relevant repository

- cite this dataset in your article

- link this dataset in your article

- if you can’t do this, be prepared to explain why!

Option D: you are required to

- deposit your research data in a relevant repository

- cite this dataset in your article

- link this dataset in your article

Option E: you are required to

- deposit your research data in a relevant repository

- cite this dataset in your article

- link this dataset in your article

- peer reviewers will review the data prior to publication

The new Elsevier policy, with its tiered system of requirements, is similar in nature to Springer/Nature's. It’s important to check with your individual journal to see which option it falls under. Ideally, you will always follow option E: make your data openly available, cite it, link it, and provide enough metadata for it to go through the peer review process or be reused by another researcher.

If you have any questions about how to enrich the metadata of your dataset, or where to deposit your research data, please email researchdata@berkeley.edu!

Maps & More: Hamilton!

You’ve listened to the musical, now put some names to places with maps related to Alexander Hamilton’s life and exploits. This month’s Maps and More collections show-and-tell event is offered in coordination with the On the Same Page program. Featuring maps and atlases from the Earth Sciences & Map Library collection, this exhibit helps put some geographic context to key events in Hamilton, from his birth in the West Indies to his years in Philadelphia and New York and his deadly duel on the banks of the Hudson.

Data Practices and Publishing Workshop Series

On Tuesday, September 5th and Tuesday, September 12th, the Kresge Engineering Library and Research Data Management will be holding a series of two data management workshops designed for researchers who are in the midst of navigating the research data lifecycle.

https://www.jisc.ac.uk/guides/research-data-management

During the first workshop, Efficient Research Data Practices, we’ll tear apart the above cycle and identify where each attendee currently falls in the data lifecycle. We’ll address pitfalls, tips, and tools for each step of the process, including creating data management plans, setting up secure storage for the active data management phase, and preparing your data for publication while adhering to publisher and funder requirements.

The second workshop, Data Sharing: Publishing and Archiving, will take a deep dive into metadata creation and preparing data for publication and archiving. We’ll discuss why data publication is so important and we’ll identify individual publisher requirements for datasets. Daniella Lowenberg, formerly a publication manager for PLoS and now the Research Data Specialist for the California Digital Library, will be joining us.

Please register for the workshops by clicking on the links below. We look forward to seeing you!

Efficient Research Data Practices: September 5, 4:00 – 5:00, Kresge Engineering Library – 110MD Bechtel Engineering Center

Data Sharing: Publishing and Archiving: September 12, 4:00 – 5:00, Kresge Engineering Library – 110MD Bechtel Engineering Center

Overleaf and ShareLaTeX – Joining Forces!

- WYSIWYG editor

- publisher relationships for streamlined submission process

- integration with Mendeley (which we also have an institutional subscription to!)

- track changes feature

- robust real-time collaborative editing environment

- syncing to GitHub (ask about UC-Berkeley’s instance!)

GitHub: Archiving and Repositories

GitHub has become ubiquitous in the coding world and, with the advent of data science and computation in a slew of other disciplines, researchers are turning to the version control repository and hosting service. Google uses it, Microsoft uses it, and it’s on the list of the top 100 most popular sites on Earth. As a librarian and a member of the Research Data Management team, I often get the question: “Can I archive my code in my GitHub repository?” From the research data management perspective, the answer is a little sticky.

The terms “archive” and “repository” as GitHub uses them mean something very different from their definitions in research data management. For example, in GitHub, a repository “contains all of the project files…and stores each file’s revision history.” Archiving content on GitHub means that your repository will stay on GitHub until you choose to remove it (or until GitHub receives a DMCA takedown notice, or the repository violates their guidelines or terms of service).

For librarians, research data managers, and many funders and publishers, archiving content in a repository means meeting more stringent requirements. For example, Dryad, a commonly known repository, requires those who wish to remove content to go through a lengthy process proving that work has been infringed, or is not in compliance with the law (read more about removing content from Dryad here). Most importantly, Dryad (and many other repositories) takes specific steps to preserve the research materials. For example:

* persistent identification

* fixity checks

* versioning

* multiple copies are kept in a variety of storage sites

A good repository provides persistent access to materials, enables discovery, and, while it cannot guarantee against data loss, takes multiple steps to prevent it.
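Of the preservation steps listed above, a fixity check is the easiest to try on your own files: record a checksum for each file, then recompute the checksums later and compare. The Python sketch below is only an illustration under a few assumptions. The function names (`sha256_of`, `build_manifest`, `changed_files`) are hypothetical, SHA-256 is our choice of algorithm, and none of this describes how Dryad or any other repository actually implements its checks.

```python
import hashlib
from pathlib import Path


def sha256_of(path: Path, chunk_size: int = 1 << 20) -> str:
    """Return the SHA-256 hex digest of a file, read in chunks."""
    digest = hashlib.sha256()
    with path.open("rb") as handle:
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def build_manifest(root: Path) -> dict[str, str]:
    """Map each file under root (by relative path) to its checksum."""
    return {
        str(p.relative_to(root)): sha256_of(p)
        for p in sorted(root.rglob("*"))
        if p.is_file()
    }


def changed_files(root: Path, manifest: dict[str, str]) -> list[str]:
    """List files that were altered, added, or removed since the manifest was built."""
    current = build_manifest(root)
    return sorted(
        name
        for name in manifest.keys() | current.keys()
        if manifest.get(name) != current.get(name)
    )
```

To use it, call `build_manifest` on your data folder when you finish a dataset, save the result alongside your documentation, and run `changed_files` against that saved manifest whenever you want to confirm that nothing has been altered or lost.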

So, how can you continue to work efficiently through GitHub and adhere to good archival practices? GitHub links up with Zenodo, a repository based out of CERN. Data files are stored at CERN with another site in Budapest. All data is backed up on a daily basis with regular fixity and authenticity checks. Zenodo assigns a digital object identifier to your code, making it persistently identifiable and discoverable. Check out this guide on Making Your Code Citable for more information on linking your GitHub with Zenodo. Zenodo isn’t perfect and there are a few limitations, including a max file size of 50 GB. Read more about their policies here.

UC-Berkeley has its own institutional version of GitHub, which means that Berkeley development teams and individual contributors can now have private repositories (and private, shared repositories within the Berkeley domain). If you’d like access, please email github@berkeley.edu. Additionally, we have institutional subscriptions to Overleaf and ShareLaTeX, both of which integrate with GitHub.

Please contact researchdata@berkeley.edu if you’d like more information about archiving your code on GitHub.

Elsevier, Springer Nature, and AAAS: Publisher Research Data Policies

- you may have entered into a research project mid-grant and are unaware of the data management plan that was included in the grant proposal

- the data management plan that was included in the grant application is not being followed

- you’re not sure how funder mandates line up with publisher requirements

- the language that publishers include about data sharing or publishing isn’t straightforward

- you know that you’re supposed to make your data public, but you don’t know where to do this or how to do this

- data sharing and data citation are encouraged

- data sharing and evidence of data sharing encouraged

- data sharing encouraged and statements of data availability required

- data sharing, evidence of data sharing and peer review of data required

- Research Data Policy Type 1 is the most lenient by encouraging data citation and sharing. I like to think of policy 1 as “data sharing lite,” because Springer Nature provides you with information about how to share and cite data, but you don’t necessarily have to. A few titles that fit into this category are: Academic Questions, Accreditation and Quality Assurance, Aesthetic Plastic Surgery, Contemporary Islam, and Journal of Happiness Studies.

- Research Data Policy Type 2 requires the authors to be more open with their relevant raw data by implying that the data will be available to any researcher who would like to reuse them for non-commercial purposes (barring confidentiality issues). This policy falls somewhere between “optional” and “mandatory.” The publisher is telling readers of its policy 2 journals that this data is freely available for them to reuse, thereby warning, or preparing, the authors that they may be asked for their data. The easiest way to handle requests like this is to make the data publicly available in a repository, with a citation and an assigned digital object identifier. A few examples of type 2 journals include: Agronomy for Sustainable Development, BioEnergy Research, Brain Imaging and Behavior, and Journal of Geovisualization and Spatial Analysis.

- Research Data Policy Type 3 is geared specifically for journals that publish research on the life sciences. When an author submits to policy 3 journals, they are strongly encouraged to deposit data in repositories. It is implied that all raw data is freely available (again, barring confidentiality issues) to any researcher who requests it. For policies 1 and 2, authors may deposit data in general repositories. However, for policy 3, researchers must deposit specific types of data in a list of prescribed repositories. For example, DNA and RNA sequencing data must be deposited in the NCBI Trace Archive or the NCBI Sequence Read Archive (SRA). A few examples of type 3 journals include: Journal of Hematology and Oncology, Nature Cell Biology, and Nature Chemistry.

- Research Data Policy Type 4 requires that all of the datasets supporting the paper’s conclusions be available to reviewers and readers. The datasets have to be available in repositories prior to the peer review process (or be made available in supplementary material), and publication is conditional upon the data being in the appropriate repository. Examples of type 4 journals include BMC Biology, Genome Biology, and Retrovirology.