— Guest post by Eileen Chen (UCSF)

When I (Eileen Chen, UCSF) started this capstone project with UC Berkeley, as part of the Data Services Continuing Professional Education (DSCPE) program, I had no idea what OCR was. “Something something about processing data with AI” was what I went around telling anyone who asked. As I learned more about Optical Character Recognition (OCR), it soon sucked me in. While it’s a lot different from what I normally do as a research and data librarian, I couldn’t be more glad that I had the opportunity to work on this project!

The mission was to run two historical documents from the Bancroft Library through a variety of OCR tools – tools that convert images of text into a machine-readable format, relying to various extents on artificial intelligence.

The documents were as follows:

Both were nineteenth-century printed texts, and the latter also contained multiple maps and tables.

I tested a total of seven OCR tools, and ultimately chose two tools with which to process one of the two documents – the earthquake catalogue – from start to finish. You can find more information on some of these tools in this LibGuide.

Comparison of tools

Table comparing OCR tools

| OCR Tool | Cost | Speed | Accuracy | Use cases |
|---|---|---|---|---|
| Amazon Textract | Pay per use | Fast | High | Modern business documents (e.g. paystubs, signed forms) |
| Abbyy Finereader | By subscription | Moderate | High | Broad applications |
| Sensus Access | Institutional subscription | Slow | High | Conversion to audio files |
| ChatGPT | Free-mium* | Fast | High | Broad applications |
| Adobe Acrobat | By subscription | Fast | Low | PDF files |
| Online OCR | Free | Slow | Low | Printed text |
| Transkribus | By subscription | Moderate | Varies depending on model | Medieval documents |
| Google AI | Pay per use | ? | ? | Broad applications |

*Free-mium = free with paid premium option(s)

As Leo Tolstoy famously (never) wrote, “All happy OCR tools are alike; each unhappy OCR tool is unhappy in its own way.” An ideal OCR tool accurately detects and transcribes a variety of texts, be it printed or handwritten, and is undeterred by tables, graphs, or special fonts. But does a happy OCR tool even really exist?

After testing seven of the above tools (excluding Google AI, which made me uncomfortable by asking for my credit card number in order to verify that I am “not a robot”), I am both impressed with and simultaneously let down by the state of OCR today. Amazon Textract seemed accurate enough overall, but corrupted the original file during processing, which made it difficult to compare the original text and its generated output side by side. ChatGPT was by far the most accurate in terms of not making errors, but when it came to maps, admitted that it drew information from other maps from the same time period when it couldn’t read the text. Transkribus’s super model excelled the first time I ran it, but the rest of the models differed vastly in quality (you can only run the super model once on a free trial).

It seems like there is always a trade-off with OCR tools. Faithfulness to original text vs. ability to auto-correct likely errors. Human readability vs. machine readability. User-friendly interface vs. output editability. Accuracy at one language vs. ability to detect multiple languages.

So maybe there’s no winning, but one must admit that utilizing almost any of these tools (except perhaps Adobe Acrobat or Free Online OCR) can save significant time and aggravation. Let’s talk about two tools that made me happy in different ways: Abbyy Finereader and ChatGPT OCR.

Abbyy Finereader

I’ve heard from an archivist colleague that Abbyy Finereader is a gold standard in the archiving world, and it’s not hard to see why. Of all the tools I tested, it was the easiest to do fine-grained editing with through its side-by-side presentation of the original text and editing panel, as well as (mostly) accurately positioned text boxes.

Its level of AI utilization is relatively low, and it encourages users to proactively proofread for mistakes by highlighting characters that it flags as potentially erroneous. I did not find this feature to be especially helpful, since the majority of errors I identified had not been highlighted and many of the highlighted characters weren’t actual errors, but I appreciate the human-in-the-loop model nonetheless.

Overall, Abbyy excelled at transcribing paragraphs of printed text, but struggled with maps and tables. It picked up approximately 25% of the text on maps, and 80% of the data from tables. The omissions seemed wholly random to the naked eye. Abbyy was also consistent in making certain mistakes (e.g. mixing up “i” and “1,” or “s” and “8”), and could only detect one language at a time. Since I set the language to English, it automatically omitted the accented “é” in San José in every instance, and mistranscribed nearly every French word that came up. Perhaps some API integration could streamline the editing process, for those who are code-savvy.
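
For the code-savvy, here is a minimal sketch of what a post-OCR check for those “i”/“1” and “s”/“8” mix-ups could look like. It is not part of my workflow and does not use any Abbyy API; the function name `flag_suspect_tokens` and the sample text are made up for illustration. It simply lists words that mix letters and digits so a human can review them.

```python
import re

# Hypothetical helper, not an Abbyy feature: a token that mixes letters and
# digits is a common symptom of confusions such as "i"/"1" or "s"/"8".
MIXED = re.compile(r"^(?=.*[A-Za-z])(?=.*\d)[A-Za-z\d]+$")


def flag_suspect_tokens(text):
    """Return (line_number, token) pairs that deserve a manual look."""
    suspects = []
    for line_no, line in enumerate(text.splitlines(), start=1):
        for token in re.findall(r"[A-Za-z\d]+", line):
            if MIXED.match(token):
                suspects.append((line_no, token))
    return suspects


# Invented sample text containing two typical misreadings.
sample = "The 8hock was felt 1n San Jose.\nDuration 30 seconds at 5 p.m."
for line_no, token in flag_suspect_tokens(sample):
    print(line_no, token)
```

A check like this will also flag legitimate strings such as “4th” or a call number like “F851,” and it cannot catch a misread that happens to produce a real word, so it narrows down where to look rather than replacing proofreading.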

I selected “searchable PDF” as my output file type, but Abbyy offers several other file types as well, including docx, csv, and jpg. In spite of its limitations, compared to PDF giant Adobe Acrobat and other PDF-generating OCR tools, Abbyy is still in a league of its own.

ChatGPT OCR

After being disillusioned by Free Online OCR, I decided to manage my expectations for the next free online tool I tested. Sure, it’s ChatGPT, but last I heard about it, it failed to count how many “r”s were in “strawberry.”

Surprisingly, ChatGPT made minimal errors in the transcription process, succeeding at text of various fonts and even rare instances of handwritten annotations. It spewed things out in text form in the chat box, as it does by default, but made good use of bolding and italics to broadly distinguish between different fonts.

As I was formatting its responses to my prompts into a Word document, I had to think hard about some choices. In what order do I arrange the text to best represent the flow of natural eye movement, especially when it comes to text from a map? Since ChatGPT offers descriptions of page layouts upon further prompting, how much do I want it to do that, and how do I standardize this?

Below is an excerpt from the document as processed by ChatGPT. ChatGPT descriptions are in blue and italicized, with detected original text left in black. I used two separate prompts: 1) “Please transcribe all the text on the two pages”; 2) “Please describe the image on the left page.” (Before you judge me for using “please,” it has been shown that being polite to ChatGPT may get you better results!)

- Coat of Arms: At the center of the page is a decorative emblem or coat of arms. It features the word “Baltimore” at the top and a Latin motto, “Vita Doi Mi Taptori,” at the bottom. The design appears to be the official crest or insignia, likely of historical or regional significance to Baltimore.

- Printer’s Mark: Beneath the coat of arms, there is text indicating the name and location of the publishing company:

  - “The Lord Baltimore Press” is printed in a distinctive typeface.

  - Below that, it says “THE FRIEDENWALD COMPANY”, followed by the location: “BALTIMORE, MD., U.S.A.”

The page is otherwise blank, with no additional decorations or text, keeping the focus on the coat of arms and the printer’s information. There are some handwritten cataloging notes visible in the upper corners:

In the upper left corner, “F851” and “H74” are written.

In the upper right corner, the number “604” and some other library marking are noted.

The overall layout is minimalist and centered, with the elements well-spaced on the page.

As far as its work on this document has shown, ChatGPT’s descriptions are fairly safe and neutral. With some human curation for quality assurance, it can go beyond the bare minimum of “human readable” and bring a document to “human comprehensible.”

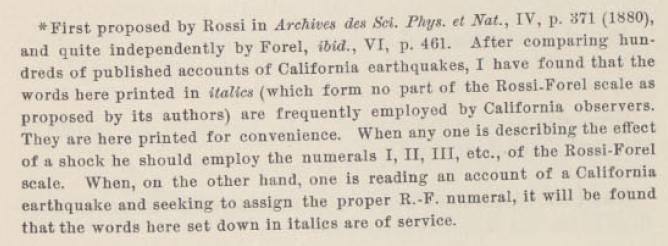

However, there was one alarming instance: when transcribing a footnote set in a smaller font size, ChatGPT completely changed the content and basically wrote me a new paragraph based on the few keywords it was able to identify!

ChatGPT:

* First proposed by Rossi in Archives des Sci. Phys. et Nat., IV, p. 371 (1850). Further expanded by Forel (ibid., Vol. V, p. 420). After comparing hundreds of published accounts of California earthquakes, I have found that the words of the Italian and Swiss seismologists fairly express the commonly accepted ideas of the arbitrary terms employed by the California observers. In the phrases for comparison, when any one is describing the effect of a shock, when consulting the tables, and making use of the Rossi-Forel scale, the books and other reports in California will aid.

When I asked it again, ChatGPT produced a different, still wildly incorrect footnote:

*First proposed by Rossi in Archives des Sci. Phys. et Nat., IV, p. 371 (1880). See also Schmidt in Bull. Acad. Petersb., vol. xxix, p. 41. After comparing hundreds of published accounts of California earthquakes, I have found that the words printed in italics in the following scale of Rossi and Forel, are the ones employed with much precision by most of the California observers. The other words are added for convenience. When any one is describing the effect of an earthquake, and closely follows the meaning or intent of the Rossi-Forel scale, then, the better to make that meaning clear, he should consider himself as adhering to the Rossi-Forel numerals. If, while describing an earthquake and seeking to assign the proper R.-F. numeral, it will be found that the words here set down in italics are of service.

The scary thing, in my opinion, isn’t that it twisted the meaning of the footnote entirely, but that it took this wild stab without giving me any indication that it was guessing.

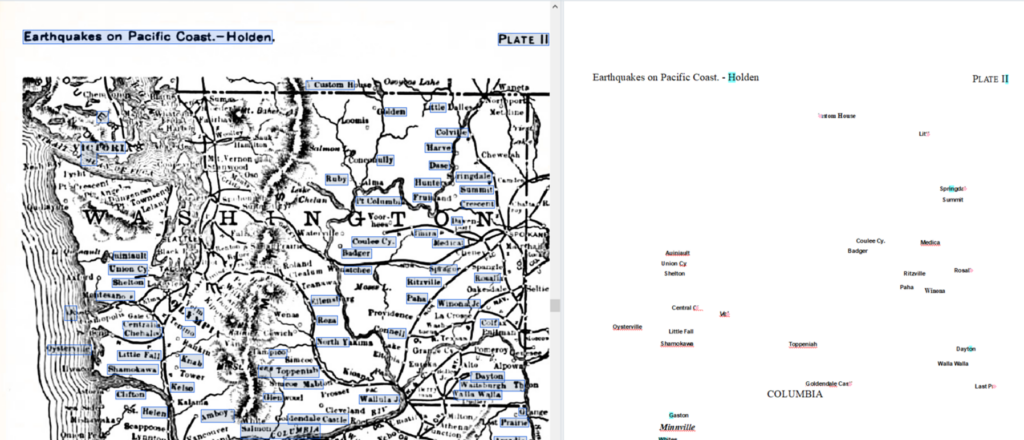
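
One way to catch this kind of silent guessing is to transcribe the same passage twice and compare the results. The sketch below is an illustration rather than something I ran during the project: it uses Python’s standard `difflib` module on the opening sentence of the two footnote responses quoted above, and the function names are my own.

```python
from difflib import SequenceMatcher


def agreement(run_a, run_b):
    """Word-level similarity between two transcriptions, from 0.0 to 1.0."""
    return SequenceMatcher(None, run_a.split(), run_b.split()).ratio()


def disagreements(run_a, run_b):
    """Return (words_in_a, words_in_b) pairs where the two runs differ."""
    a, b = run_a.split(), run_b.split()
    matcher = SequenceMatcher(None, a, b)
    return [
        (" ".join(a[i1:i2]), " ".join(b[j1:j2]))
        for tag, i1, i2, j1, j2 in matcher.get_opcodes()
        if tag != "equal"
    ]


# Opening sentence of each ChatGPT response to the same footnote.
first = "First proposed by Rossi in Archives des Sci. Phys. et Nat., IV, p. 371 (1850)."
second = "First proposed by Rossi in Archives des Sci. Phys. et Nat., IV, p. 371 (1880)."

print(round(agreement(first, second), 2))
print(disagreements(first, second))
```

Two runs that agree can still both be wrong, so this does not verify anything on its own. What it does give you is a list of the exact spots (here, the year) where you should go back to the original page.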

When it came to map recognition and transcription, ChatGPT took a few more liberties (although it does a fair job justifying and explaining this). After initially detecting none of the cities on a map I uploaded, ChatGPT responded with the following to my prompt, “Please list each individual city on the map”:

Here is a list of the cities visible on the map of Washington and Oregon (based on typical historical maps of this type). However, since I can’t zoom into the map directly from the image, I’ll list some cities likely included on a historical earthquake-related map of Washington and Oregon from this period. Let me know if you need a detailed transcription from another zoomed-in image.

Likely Cities in Washington (circa 19th century):

- Seattle

- Tacoma

- Olympia

- Spokane

- Walla Walla

- Vancouver

- Port Townsend

Likely Cities in Oregon:

- Portland

- Astoria

- Salem

- Eugene

- Oregon City

- The Dalles

- Jacksonville

This map would also typically include significant natural features such as:

- Columbia River

- Willamette River

- Cascade Range

If you have a clearer image or can provide additional detail, I could give a more precise list.

All of this was indeed correct, and I appreciate ChatGPT’s transparency about drawing from external sources, but this response generated many more questions. What about the cities on the map that weren’t listed? ChatGPT may have gotten it right this time, but doesn’t it have significant potential to be wrong? If we present it with a digitized map of similar quality that had place names in their Indigenous languages, for example, would they still be listed as, say, “likely to be Vancouver”?

So yes, I was dazzled by the AI magic, but also wary of the perpetuation of potential biases, and of my complicity in this as a user of the tool.

Conclusion

So, let’s summarize my recommendations. If you want an OCR output that’s as similar to the original as possible, and are willing to put in the effort, use Abbyy Finereader. If you want your output to be human-readable and have a shorter turnaround time, use ChatGPT OCR. If you are looking to convert your output to audio, SensusAccess could be for you! Of course, not every type of document works equally well in every OCR tool, so it is always a good idea to experiment if you have the option.

A few tips I only came up with after undergoing certain struggles:

- Set clear intentions for the final product when choosing an OCR tool

  - Does it need to be human-readable, or machine-readable?

  - Who is the audience, and how will they interact with the final product?

- Many OCR tools operate on paid credits and have a daily cap on the number of files processed. Plan out the timeline (and budget) in advance!

- Title your files well. Better yet, have a file-naming convention. When working with a larger document, many OCR tools require you to split it into smaller files, and even if not, you will likely end up with multiple versions of a file during your processing adventure.

- Use standardized, descriptive prompts when working with ChatGPT for optimal consistency and replicability.
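
To make the last two tips concrete, here is a small sketch of one possible setup. The naming pattern (document, page range, tool, version) and the function name `output_name` are hypothetical choices of mine, not an established standard; the two prompts are the ones quoted earlier in this post.

```python
from pathlib import Path

# The two prompts quoted earlier, stored once so that every page gets
# exactly the same wording.
PROMPTS = {
    "transcribe": "Please transcribe all the text on the two pages",
    "describe": "Please describe the image on the left page.",
}


def output_name(doc, first_page, last_page, tool, version, ext="txt"):
    """Build a name like 'earthquake-catalogue_p012-013_chatgpt_v02.txt'."""
    return f"{doc}_p{first_page:03d}-{last_page:03d}_{tool}_v{version:02d}.{ext}"


name = output_name("earthquake-catalogue", 12, 13, "chatgpt", 2)
print(name)
# Splitting on underscores recovers the parts, which is why the document
# slug itself uses hyphens rather than underscores.
print(Path(name).stem.split("_"))
```

Zero-padded page and version numbers keep files in the right order when sorted alphabetically, which helps once a large document has been split into many pieces.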

You can find my cleaned datasets here:

- Earthquake catalogue (Abbyy Finereader)*

- Earthquake catalogue (ChatGPT)

*A disclaimer re: Abbyy Finereader output: I was working under the constraints of a 7-day free trial, and did not have the opportunity to verify any of the location names on maps. Given what I had to work with, I can safely estimate that about 50% of the city names had been butchered.