Tag: data

UC Berkeley celebrates Love Data Week with great talks and tips!

Kicking off #lovedata18 week @UCBerkeley wih a workshop about @Scopus API! @UCBIDS @UCBerkeleyLib pic.twitter.com/qnXbQp7a9j

— Yasmina Anwar (@yasmina_anwar) February 13, 2018

Last week, the University Library, the Berkeley Institute for Data Science (BIDS), and the Research Data Management program were delighted to host Love Data Week (LDW) 2018 at UC Berkeley. Love Data Week is a nationwide campaign designed to raise awareness about data visualization, management, sharing, and preservation. The theme of this year’s campaign was data stories: how data is being used in meaningful ways to shape the world around us.

At UC Berkeley, we hosted a series of events designed to help researchers, data specialists, and librarians to better address and plan for research data needs. The events covered issues related to collecting, managing, publishing, and visualizing data. The audiences gained hands-on experience with using APIs, learned about resources that the campus provides for managing and publishing research data, and engaged in discussions around researchers’ data needs at different stages of their research process.

Participants from many campus groups (e.g., LBNL, CSS-IT) were eager to continue the stimulating conversation around data management. Check out the full program and information about the presented topics.

Photographs by Yasmin AlNoamany for the University Library and BIDS.

LDW at UC Berkeley kicked off with a walkthrough and demos of the Scopus APIs (Application Programming Interfaces), led by Eric Livingston of the publishing company Elsevier. Elsevier provides a set of APIs that allow users to access the content of journals and books published by Elsevier.

In the first part of the session, Eric provided a quick introduction to APIs and an overview of Elsevier APIs. He illustrated the purposes of the different APIs that Elsevier provides, such as the ScienceDirect APIs, SciVal API, Engineering Village API, Embase APIs, and Scopus APIs. As mentioned by Eric, anyone can get free access to Elsevier APIs, and the content published by Elsevier under Open Access licenses is fully available. Eric explained that Scopus APIs allow users to access curated abstracts and citation data from all scholarly journals indexed by Scopus, Elsevier’s abstract and citation database. He detailed several popular Scopus APIs, such as the Search API, Abstract Retrieval API, Citation Count API, Citation Overview API, and Serial Title API. Eric also gave an overview of the amount of data that the Scopus database holds.

In the second half of the workshop, Eric explained how Scopus APIs work and how to get a key to Scopus APIs, and showed different authentication methods. He walked the group through live queries and showed them how to extract data from the API and how to debug queries using the advanced search. He talked about the limitations of the APIs and provided tips and tricks for working with Scopus APIs.
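To make the idea of a keyed API query concrete, here is a minimal sketch in Python of how a Scopus Search API request could be assembled. It is not the code from the workshop. The helper name `build_scopus_search` and the placeholder key are assumptions for illustration, and the sketch only builds the URL and headers; it does not send the request or cover the other authentication methods Eric showed.

```python
from urllib.parse import urlencode

# Endpoint of the Scopus Search API on Elsevier's developer platform.
SCOPUS_SEARCH_URL = "https://api.elsevier.com/content/search/scopus"


def build_scopus_search(query, api_key, count=25, start=0):
    """Return (url, headers) for a Scopus search request without sending it.

    build_scopus_search is a hypothetical helper written for this post.
    """
    # 'query' uses Scopus advanced-search syntax; 'count' and 'start' page
    # through the result set.
    params = {"query": query, "count": count, "start": start}
    url = f"{SCOPUS_SEARCH_URL}?{urlencode(params)}"
    headers = {
        "X-ELS-APIKey": api_key,       # the key you register for
        "Accept": "application/json",  # ask for a JSON response
    }
    return url, headers


if __name__ == "__main__":
    url, headers = build_scopus_search(
        'TITLE-ABS-KEY("research data management")', "YOUR_API_KEY"
    )
    print(url)
```

Keeping the request-building step separate from the network call makes it easy to print and inspect a query, or paste it into the advanced search, before spending any of your request quota.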

Eric left the attendees with working code and scripts for retrieving data from Scopus APIs.

On the second day, we hosted a Data Stories and Visualization Panel, featuring Claudia von Vacano (D-Lab), Garret S. Christensen (BIDS and BITSS), Orianna DeMasi (Computer Science and BIDS), and Rita Lucarelli (Department of Near Eastern Studies). The talks and discussions centered upon how data is being used in creative and compelling ways to tell stories, as well as the rewards and challenges of supporting groundbreaking research when the underlying research data is restricted.

Claudia von Vacano, the Director of D-Lab, discussed the Online Hate Index (OHI), a joint initiative with the Anti-Defamation League’s (ADL) Center for Technology and Society that uses crowd-sourcing and machine learning to develop scalable detection of the growing amount of hate speech within social media. In its recently completed initial phase, the project focused on training a model based on an unbiased dataset collected from Reddit. Claudia explained the process, from identifying the problem, defining hate speech, and establishing rules for human coding, through building, training, and deploying the machine learning model. Going forward, the project team plans to improve the accuracy of the model and extend it to include other social media platforms.

Next, Garret S. Christensen, BIDS and BITSS fellow, talked about his experience with research data. He started by providing a background about his research, then discussed the challenges he faced in collecting his research data. The main research questions that Garret investigated are: How are people responding to military deaths? Do large numbers of, or high-profile, deaths affect people’s decision to enlist in the military?

Garret discussed the challenges of obtaining and working with Department of Defense data, acquired through a Freedom of Information Act request, for the purpose of researching war deaths and military recruitment. Despite all the challenges that Garret faced and the time he spent on getting the data, he succeeded in putting the data together into a public repository. Now the information on deaths in the US Military from January 1, 1990 to November 11, 2010 that was obtained through the Freedom of Information Act request is available on Dataverse. At the end, Garret showed that deaths and recruits have a negative relationship.

Orianna DeMasi, a graduate student in Computer Science and a BIDS Fellow, shared her story of working with human subjects data. The focus of Orianna’s research is on building tools to improve mental healthcare. Orianna framed her story about collecting and working with human subjects data as a fairy tale. She indicated that working with human data makes security and privacy essential. She has learned that it’s easy to get blocked “waiting for data” rather than advancing the project in parallel to collecting or accessing data. At the end, Orianna advised the attendees that “we need to keep our eyes on the big problems and data is only the start.”

Rita Lucarelli, of the Department of Near Eastern Studies, discussed the Book of the Dead in 3D project, which shows how photogrammetry can help with the visualization and study of different sets of data within their own physical context. According to Rita, the “Book of the Dead in 3D” project aims in particular to create a database of “annotated” models of the ancient Egyptian coffins of the Hearst Museum, which is radically changing the scholarly approach to and study of these inscribed objects, while also posing a challenge in relation to data sharing and the publication of the artifacts. Rita indicated that metadata is growing and that digital data and digitization are challenging.

It was fascinating to hear about Egyptology and how to visualize 3D ancient objects!

We closed out LDW 2018 at UC Berkeley with a session about Research Data Management Planning and Publishing. In the session, Daniella Lowenberg (University of California Curation Center) started by discussing the reasons to manage, publish, and share research data on both practical and theoretical levels.

Daniella shared practical tips about why, where, and how to manage research data and prepare it for publishing. She discussed relevant data repositories that UC Berkeley and other entities offer. Daniella also illustrated how to make data reusable, and highlighted the importance of citing research data and how this maximizes the benefit of research.

At the end, Daniella presented a live demo on using Dash for publishing research data and encouraged UC Berkeley workshop participants to contact her with any questions about data publishing. In a lively debate, researchers shared with Daniella their experiences of managing research data and highlighted what has worked and what has proved difficult.

We have received overwhelmingly positive feedback from the attendees. Attendees also expressed their interest in having similar workshops to understand the broader perspectives and skills needed to help researchers manage their data.

I would like to thank BIDS and the University Library for sponsoring the events.

—

Yasmin AlNoamany

Love Data Week 2018 at UC Berkeley

The University Library, Research IT, and Berkeley Institute for Data Science will host a series of events on February 12th-16th during Love Data Week 2018. Love Data Week is a nationwide campaign designed to raise awareness about data visualization, management, sharing, and preservation.

Please join us to learn about multiple data services that the campus provides and discover options for managing and publishing your data. Graduate students, researchers, librarians and data specialists are invited to attend these events to gain hands-on experience, learn about resources, and engage in discussion around researchers’ data needs at different stages in their research process.

To register for these events and find out more, please visit: http://guides.lib.berkeley.edu/ldw2018guide

Schedule:

Intro to Scopus APIs – Learn about working with APIs and how to use the Scopus APIs for text mining.

01:00 – 03:00 p.m., Tuesday, February 13, Doe Library, Room 190 (BIDS)

Refreshments will be provided.

Data stories and Visualization Panel – Learn how data is being used in creative and compelling ways to tell stories. Researchers across disciplines will talk about their successes and failures in dealing with data.

1:00 – 02:45 p.m., Wednesday, February 14, Doe Library, Room 190 (BIDS)

Refreshments will be provided.

Planning for & Publishing your Research Data – Learn why and how to manage and publish your research data as well as how to prepare a data management plan for your research project.

02:00 – 03:00 p.m., Thursday, February 15, Doe Library, Room 190 (BIDS)

Hope to see you there!

Great talks and fun at csv,conf,v3 and Carpentry Training

Day1 @CSVConference! This is the coolest conf I ever been to #csvconf pic.twitter.com/ao3poXMn81

— Yasmina Anwar (@yasmina_anwar) May 2, 2017

On May 2–5, 2017, I (Yasmin AlNoamany) was thrilled to attend the csv,conf,v3 2017 conference and the Software/Data Carpentry instructor training in Portland, Oregon, USA. It was a unique experience to attend and speak with many people who are passionate about data and open science.

The csv,conf,v3

The csv,conf is for data makers from academia, industry, journalism, government, and open source. We had four amazing keynotes by Mike Bostock, the creator of D3.js (a JavaScript library for data visualization), Angela Bassa, the Director of Data Science at iRobot, Heather Joseph, the Executive Director of SPARC, and Laurie Allen, who leads the Digital Scholarship Group at the University of Pennsylvania Libraries. The conference had four parallel sessions and a series of workshops about data. Check out the full schedule here.

.@ErinSBecker is talking @CSVConference about @datacarpentry & @swcarpentry workshops! #csvconf pic.twitter.com/beKOixMNrt

— Yasmina Anwar (@yasmina_anwar) May 2, 2017

If you wanna change the culture, start early! @ProjectJupyter @openscience @CSVConference #csvconf pic.twitter.com/7OVMjMvVrc

— Yasmina Anwar (@yasmina_anwar) May 2, 2017

"Speak out, step up, take actions" Great ending for an amazing keynote by @hjoseph @CSVConference #csvconf pic.twitter.com/O4Vogsfhe4

— Yasmina Anwar (@yasmina_anwar) May 2, 2017

Fascinated by @yasmina_anwar's research on using web archives to automatically create histories for the next generation. #csvconf pic.twitter.com/sbI359n5Bk

— V Ikeshoji-Orlati (@vikeshojiorlati) May 3, 2017

On the second day, I presented on generating stories from archived data, in a talk entitled “Using Web Archives to Enrich the Live Web Experience Through Storytelling”. Check out the slides of my talk below.

I demonstrated the steps of the proposed framework, the Dark and Stormy Archives (DSA), in which we identify, evaluate, and select candidate Web pages from archived collections that summarize the holdings of these collections, arrange them in chronological order, and then visualize these pages using tools that users are already familiar with, such as Storify. For more information about this work, check out this post.
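The select-and-order steps can be pictured with a small Python sketch. This is only an illustration of the general idea, not the DSA implementation: the `ArchivedPage` record, its `quality` score, the 0.5 threshold, and the even-sampling rule are all assumptions made for the example.

```python
from dataclasses import dataclass
from datetime import datetime


@dataclass
class ArchivedPage:
    url: str
    captured: datetime  # when the archive captured the page
    quality: float      # hypothetical 0..1 score from an evaluation step


def select_story_pages(pages, k, min_quality=0.5):
    """Pick up to k pages: drop low-scoring captures, sort by capture time,
    then sample evenly so the story spans the whole collection."""
    candidates = sorted(
        (p for p in pages if p.quality >= min_quality),
        key=lambda p: p.captured,
    )
    if k <= 0 or not candidates:
        return []
    if len(candidates) <= k:
        return candidates
    if k == 1:
        return [candidates[0]]
    # Evenly spaced positions from the first to the last candidate.
    step = (len(candidates) - 1) / (k - 1)
    return [candidates[round(i * step)] for i in range(k)]
```

The returned list is already in chronological order, so it can be handed directly to whatever visualization tool renders the story.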

https://twitter.com/HamdanAzhar/status/859851223515582464

The csv,conf deserved to win the conference of the year prize for bringing the CommaLlama. The alpaca brought much joy and happiness to all conference attendees. It was fascinating to be at csv,conf 2017 to meet and hear from people from everywhere who are passionate about data.

#commaLlama is in the chapel 😃! This is amazing! #csvconf @CSVConference pic.twitter.com/cnQJzQ9L3h

— Yasmina Anwar (@yasmina_anwar) May 3, 2017

#commaLlama with @CLIRnews fellows @vikeshojiorlati and @yasmina_anwar @CSVConference #csvconf pic.twitter.com/Mpfooy9ccP

— Yasmina Anwar (@yasmina_anwar) May 3, 2017

#Trump 😒 😡vs #Clinton😭💕 #emoji #emojis #electionsemojis #csvconf pic.twitter.com/yYoUzm158H

— Yasmina Anwar (@yasmina_anwar) May 3, 2017

#Data needs to be findable, accessible, interoperable, reusable! @rchampieux #csvconf pic.twitter.com/1VIQzHSIh4

— Yasmina Anwar (@yasmina_anwar) May 3, 2017

Shout out for all @CSVConference organizers! We enjoyed all the talks, keynotes, #commaLlama #csvconf #data pic.twitter.com/BLjRCCRYdu

— Yasmina Anwar (@yasmina_anwar) May 4, 2017

After the conference, Max Ogden from the Dat Project gave us a great tour from the conference venue to South Portland. We had great food from Portland’s street food trucks, then we had a great time with adorable neighborhood cats!

https://twitter.com/yasmina_anwar/status/860017390968492032

The Carpentry Training

Congratulations, everyone! You did it! Portland 2017 cohort of @datacarpentry / @swcarpentry Instructors! @LibCarpentry #porttt pic.twitter.com/J3ceEzwOBQ

— Library Carpentry (@LibCarpentry) May 5, 2017

https://twitter.com/LibCarpentry/status/860571982479241216

The two days had a mix of lectures and hands-on exercises about learning philosophy and Carpentry teaching practices. It was a unique and fascinating experience. We had two energetic instructors, Tim Dennis and Belinda Weaver, who created a welcoming and collaborative environment for us. Check out the full schedule and lessons here.

.@yasmina_anwar just convinced me to fall in love with RStudio from her live code teaching example at #porttt.

— Elaine Wong (@elthenerd) May 5, 2017

At the end, I would like to acknowledge the support I had from the California Digital Library and the committee of the csv,conf for giving me this amazing opportunity to attend and speak at the csv,conf and the Carpentry instructor training. I am looking forward to applying what I learned in upcoming Carpentry workshops at UC Berkeley.

–Yasmin

Great talks and tips in Love Your Data Week 2017

Opening out #LYD17 w/great discussion of #securing research data. Thanks to our experts speakers @UCBIDS @DH @DLabAtBerkeley @ucberkeleylib pic.twitter.com/DqvIKb9sDL

— Yasmina Anwar (@yasmina_anwar) February 14, 2017

This week, the University Library and the Research Data Management program were delighted to participate in the Love Your Data (LYD) Week campaign by hosting a series of workshops designed to help researchers, data specialists, and librarians to better address and plan for research data needs. The workshops covered issues related to managing, securing, publishing, and licensing data. Participants from many campus groups (e.g., LBNL, CSS-IT) were eager to continue the stimulating conversation around data management. Check out the full program and information about the presented topics.

Photographs by Yasmin AlNoamany for the University Library.

The first day of LYD week at UC Berkeley was kicked off by a discussion panel about Securing Research Data, featuring Jon Stiles (D-Lab, Federal Statistical RDC), Jesse Rothstein (Public Policy and Economics, IRLE), and Carl Mason (Demography). The discussion centered upon the rewards and challenges of supporting groundbreaking research when the underlying research data is sensitive or restricted. In a lively debate, various social science researchers detailed their experiences working with sensitive research data and highlighted what has worked and what has proved difficult.

At the end, Chris Hoffman, the Program Director of the Research Data Management program, described a campus-wide project about Securing Research Data. Hoffman said the goals of the project are to improve guidance for researchers, benchmark other institutions’ services, and assess the demand and make recommendations to campus. Hoffman asked the attendees for their input about the services that the campus provides.

On the second day, we hosted a workshop about the best practices for using Box and bDrive to manage documents, files and other digital assets by Rick Jaffe (Research IT) and Anna Sackmann (UC Berkeley Library). The workshop covered multiple issues about using Box and bDrive such as the key characteristics, and personal and collaborative use features and tools (including control permissions, special purpose accounts, pushing and retrieving files, and more). The workshop also covered the difference between the commercial and campus (enterprise) versions of Box and Drive. Check out the RDM Tools and Tips: Box and Drive presentation.

We closed out LYD Week 2017 at UC Berkeley with a workshop about Research Data Publishing and Licensing 101. In the workshop, Anna Sackmann and Rachael Samberg (UC Berkeley’s Scholarly Communication Officer) shared practical tips about why, where, and how to publish and license your research data. (You can also read Samberg & Sackmann’s related blog post about research data publishing and licensing.)

In the first part of the workshop, Anna Sackmann talked about reasons to publish and share research data on both practical and theoretical levels. She discussed relevant data repositories that UC Berkeley and other entities offer, and provided criteria for selecting a repository. Check out Anna Sackmann’s presentation about Data Publishing.

During the second part of the presentation, Rachael Samberg illustrated the importance of licensing data for reuse and how copyright and the agreements researchers enter into affect licensing rights and choices. She also distinguished between data attribution and licensing. Samberg mentioned that data licensing helps resolve ambiguity about permissions to use data sets and incentivizes others to reuse and cite data. At the end, Samberg explained how people can license their data and advised UC Berkeley workshop participants to contact her with any questions about data licensing.

Check out the slides from Rachael Samberg’s presentation about data licensing below.

The workshops received positive feedback from the attendees. Attendees also expressed their interest in having similar workshops to understand the broader perspectives and skills needed to help researchers manage their data.

—

Yasmin AlNoamany

Special thanks to Rachael Samberg for editing this post.

Love Your Data Week 2017

Love Your Data (LYD) Week is a nationwide campaign designed to raise awareness about research data management, sharing, and preservation. At UC Berkeley, the University Library and the Research Data Management program will host a set of events from February 13th-17th to encourage and teach researchers how to manage, secure, publish, and license their data. Presenters will describe groundbreaking research on sensitive or restricted data and explore services needed to unlock the research potential of restricted data.

Graduate students, researchers, librarians and data specialists are invited to attend these events and learn about the multiple data services that the campus provides.

Schedule

To register for these events and find out more, please visit our LYD Week 2017 guide:

- Securing Research Data – Explore services needed to unlock the research potential of restricted data.

11:00 am-12:00 pm, Tuesday, February 14, Doe Library, Room 190 (BIDS)

For more background on the Securing Research Data project, please see this RIT News article.

- RDM Tools & Tips: Box and Drive – Learn the best practices for using Box and bDrive to manage documents, files, and other digital assets.

10:30 am-11:45 am, Wednesday, February 15, Doe Library, Room 190 (BIDS)

Refreshments are provided by the UC Berkeley Library.

- Research Data Publishing and Licensing – This workshop covers why and how to publish and license your research data.

11:00 am-12:00 pm, Thursday, February 16, Doe Library, Room 190 (BIDS)

The presenters will share practical tips, resources, and stories to help researchers at different stages in their research process.

Sponsored and organized by the UC Berkeley Library and the Research Data Management program. Contact yasmin@berkeley.edu or quinnd@berkeley.edu with questions.

—–

Yasmin AlNoamany

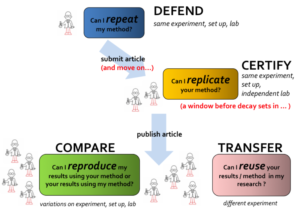

Could I re-research my first research?

Last week, one of my teammates at Old Dominion University contacted me and asked if she could apply some of the techniques I adopted in the first paper I published during my Ph.D. She asked about the data and any scripts I had used to pre-process the data and implement the analysis. I directed her to where the data was saved along with a detailed explanation of the structure of the directories. It took me a while to remember where I had saved the data and the scripts I had written for the analysis. At the time, I did not know about data management and the best practices to document my research.

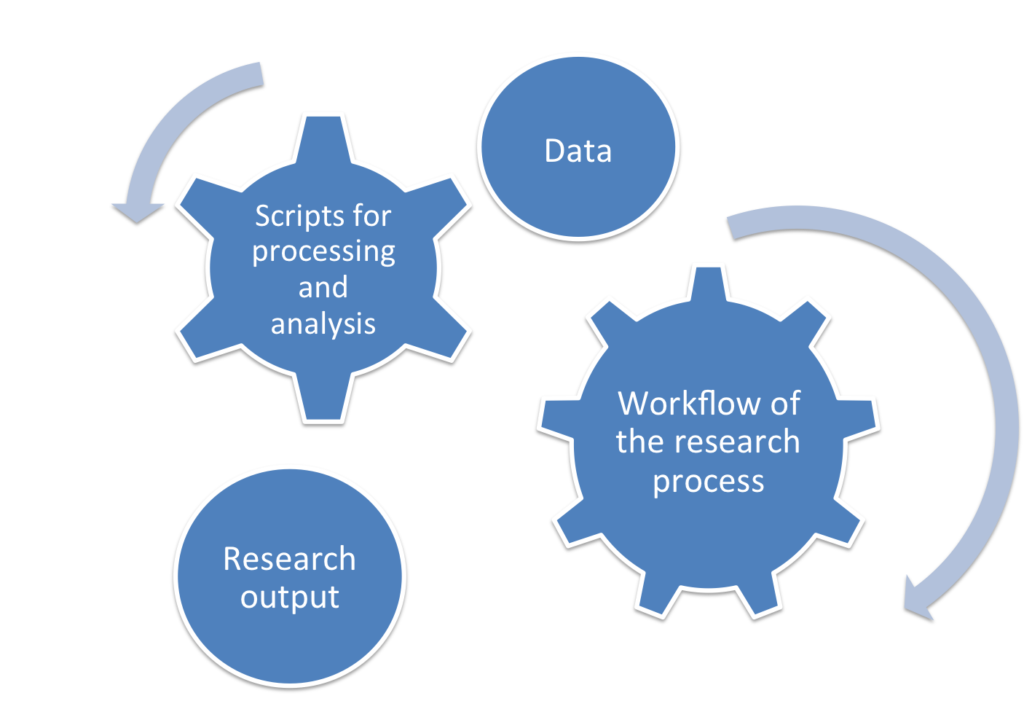

I shared the scripts I generated for pre-processing the data with my colleague, but the information I gave her did not cover all the details regarding my workflow. There were many steps I had done manually for producing the input and the output to and from the pre-processing scripts. Luckily I had generated a separate document that had the steps of the experiments I conducted to generate the graphs and tables in the paper. The document contained details of the research process in the paper along with a clear explanation for the input and the output of each step. When we submit a scientific paper, we get reviews back after a couple of months. That was why I documented everything I had done, so that I could easily regenerate any aspect of my paper if I needed to make any future updates.

Documenting the workflow and the data of my research paper during the active phase of the research saved me the trouble of trying to remember all the steps I had taken if I needed to make future updates to my research paper. Now my colleague has all the entities of my first research paper: the dataset, the output paper of my research, the scripts that generated this output, and the workflow of the research process (i.e., the steps that were required to produce this output). She can now repeat the pre-processing for the data using my code in a few minutes.

Funding agencies have data management planning and data sharing mandates. Although this is important to scientific endeavors and research transparency, following good practices in managing research data and documenting the workflow of the research process is just as important. Reproducing the research is not only about storing data. It is also about the best practices to organize this data and document the experimental steps so that the data can be easily re-used and the research can be reproduced. Documenting the directory structure of the data in a file and attaching this file to the experiment directory would have saved me a lot of time. Furthermore, having a clear guidance for the workflow and documentation on how the code was built and run is an important step to making the research reproducible.
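As one way to picture the “directory structure in a file” idea, here is a minimal Python sketch that walks an experiment directory and writes a listing of its files next to the data. It is not the script I used at the time; the function name `write_manifest` and the file name `MANIFEST.txt` are assumptions chosen for the example.

```python
import os


def write_manifest(root, manifest_name="MANIFEST.txt"):
    """Write a plain-text listing of every file under root (relative path and
    size in bytes, tab-separated) and return the path of the listing."""
    lines = []
    for dirpath, dirnames, filenames in os.walk(root):
        dirnames.sort()  # make the traversal order deterministic
        for name in sorted(filenames):
            if dirpath == root and name == manifest_name:
                continue  # do not list the manifest itself
            path = os.path.join(dirpath, name)
            rel = os.path.relpath(path, root).replace(os.sep, "/")
            lines.append(f"{rel}\t{os.path.getsize(path)}")
    manifest_path = os.path.join(root, manifest_name)
    with open(manifest_path, "w", encoding="utf-8") as fh:
        fh.write("\n".join(lines) + "\n")
    return manifest_path
```

Running it again after adding or moving files refreshes the listing, so the manifest can live inside the experiment directory and travel with the data. A short free-text note on what each folder holds is still worth adding by hand.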

While I was working on my paper, I adopted multiple well-known techniques and algorithms for pre-processing the data. Unfortunately, I could not find any source code that implemented them, so I had to write new scripts for old techniques and algorithms. To advance scientific research, researchers should be able to efficiently build upon past research, and it should not be difficult for them to apply the basic tenets of the scientific method. My teammate should not have to re-implement the algorithms and techniques I adopted in my research paper. It is time to change the culture of scientific computing to sustain and ensure the integrity of reproducibility.

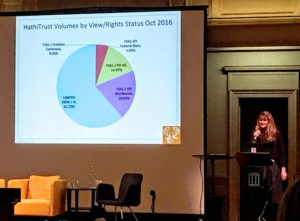

Library Leaders Forum 2016

On October 26-28, I had the honor of attending the Library Leaders Forum 2016, which was held at the Internet Archive (IA). This year’s meeting was geared towards envisioning the library of 2020. October 26th was also IA’s 20th anniversary. I joined my Web Science and Digital Libraries (WS-DL) Research Group in celebrating IA’s 20 years of preservation by contributing a blog post with my own personal story, which highlights a side of the importance of Web preservation for the Egyptian Revolution. More personal stories about Web archiving exist on WS-DL blog.

In the Great Room at the Internet Archive, Brewster Kahle, the Internet Archive’s Founder, kicked off the first day by welcoming the attendees. He began by highlighting the importance of openness, sharing, and collaboration for the next generation. During his speech he raised an important question, “How do we support datasets, the software that come with it, and open access materials?” According to Kahle, the advancement of digital libraries requires collaboration.

After Brewster Kahle’s brief introduction, Wendy Hanamura, the Internet Archive’s Director of Partnership, highlighted parts of the schedule and presented the rules of engagement and communication:

- The rule of 1 – Ask one question answer one question.

- The rule of n – If you are in a group of n people, speak 1/n of the time.

Before giving the microphone to the attendees for their introductions, Hanamura gave a piece of advice, “be honest and bold and take risks”. She then informed the audience that “The Golden Floppy” award would be given to attendees who shared bold or honest statements.

Next was our chance to get to know each other through self-introductions. We were supposed to talk about who we are, where we are from and finally, what we want from this meeting or from life itself. The challenge was to do this in four words.

After the introductions, Sylvain Belanger, the Director of Preservation at Library and Archives Canada, talked about where his organization will be heading in 2020. He mentioned the physical side of the work they do in Canada to show the challenges they experience. They store, preserve, and circulate over 20 million books, 3 million maps, 90,000 films, and 500 sheets of music.

“We cannot do this alone!” Belanger exclaimed. He emphasized how important partnership is to advancing the library field. He mentioned that Library and Archives Canada is looking to enhance preservation and access as well as looking for partnerships. They would also like to introduce the idea of innovation into the mindset of their employees. According to Belanger, the Archives’ vision for the year 2020 includes consolidating their expertise as much as they can and learning how other people do their digitization and Web archiving work.

After Belanger’s talk, we split up into groups of three to meet people we didn’t know and exchange knowledge about what we do and where we came from. Then two groups joined to form a group of six to exchange visions, challenges, and opportunities. Most of the attendees agreed on the need for growth and accessibility of digitized materials. Some of the challenges were funding, ego, power, culture, etc.

Our visions for 2020, challenges, opportunities – vision: growth & accessibility #libraryleaders2016 @internetarchive pic.twitter.com/ePkpKzvRGB

— Yasmina Anwar (@yasmina_anwar) October 27, 2016

Chris Edward, the Head of Digital Services at the Getty Research Institute, talked about what they are doing, where they are going, and the impact of their partnership with the IA. Edward mentioned that the uploads by the IA are harvested by HathiTrust and the Defense Logistics Agency (DLA). This allows them to distribute their materials. Their vision for 2020 is to continue working with the IA, expand the Getty research portal, digitize everything they have, and make it available for everyone, anywhere, all the time. They also intend to automate metadata generation (OCR, image recognition, object recognition, etc.), make archival collections accessible, and do 3D digitization of architectural models. They will then join forces with the International Image Interoperability Framework (IIIF) community to develop the capability to represent these objects. He also added that they want to help the people who do not have the ability to do it on their own.

After lunch, Wendy Hanamura walked us quickly through the Archive’s strategic plan for 2015-2020 and IA’s tools and projects. Some of these plans are:

- Next generation Wayback Machine

- Test pilot with Mozilla so they suggest archived pages for the 404

- Wikimedia link rots

- Building libraries together

- The 20 million books

- Table top scribe

- Open library and discovery tool

- Digitization supercenter

- Collaborative circulation system

- Television Archive — Political ads

- Software and emulation

- Proprietary code

- Scientific data and Journals – Sharing data

- Music — 78’s

Wendy Hanamura ended her talk with “No book should be digitized twice!”

Then we had a chance to try out the new tools from the IA and its partners at multiple makers’ space stations. There were plenty of interesting projects, but I focused on the International Research Data Commons by Karissa McKelvey and Max Ogden from the Dat Project. Dat is a grant-funded project that introduces open source tools to manage, share, publish, browse, and download research datasets. Dat supports peer-to-peer distribution systems (e.g., BitTorrent). Ogden mentioned that their goal is to build a data management tool that is as easy to use as Dropbox and also has a version control system like Git.

After a break, Jeffrey MacKie-Mason, the University Librarian of UC Berkeley, interviewed Brewster Kahle about the future of libraries and online knowledge. The discussion covered copyright, digitization, prioritization of archiving materials, the cost of preservation, avoiding duplication, accessibility and scale, the IA’s plans to improve the Wayback Machine, and other issues related to digitization and preservation. At the end of the interview, Kahle announced the white paper he wrote, entitled “Transforming Our Libraries into Digital Libraries”, and solicited feedback and suggestions from the audience.

"Dark Archive is one of the WORST ideas ever" @brewster_kahle @internetarchive #libraryleaders2016

— Yasmina Anwar (@yasmina_anwar) October 27, 2016

@waybackmachine is the calling card of the @internetarchive and it has been neglected until recently #libraryleaders2016

— Merrilee Proffitt (@MerrileeIAm) October 27, 2016

https://twitter.com/tripofmice/status/791790807736946688

https://twitter.com/tripofmice/status/791786514497671168

Its not what we can get away with, but rather what is the role of libraries in #copyright issues -Brewster K. #libraryleaders2016

— Dr.EB 🇵🇸 (@LNBel) October 27, 2016

At the end of the day, we had an unusual and creative group photo by the great photographer Brad Shirakawa who climbed out on a narrow plank high above the crowd to take our picture.

On day two, the first session I attended was a keynote address by Brewster Kahle about his vision for the Internet Archive’s Library of 2020, and what that might mean for all libraries.

"A library is engine for research!!" #libraryleaders2016 pic.twitter.com/D2du0L67T2

— Yasmina Anwar (@yasmina_anwar) October 28, 2016

Heather Christenson, the Program Officer for HathiTrust, talked about where HathiTrust is heading in 2020. Christenson started by briefly explaining what HathiTrust is and why it is important for libraries. She said that HathiTrust’s primary mission is preserving print and digital collections, improving discovery and access by offering text search and bibliographic data APIs, and generating a comprehensive collection of US federal documents. Christenson mentioned that they surveyed their membership and found that people want them to focus on books, videos, and text materials.

Our next session was a panel discussion about the Legal Strategies Practices for libraries by Michelle Wu, the Associate Dean for Library Services and Professor of Law at the Georgetown University Law Center, and Lila Bailey, the Internet Archive’s Outside Legal Counsel. Both speakers shared real-world examples and practices. They mentioned that the law has never been clearer and that digitizing has never been safer, but the open question is access. They advised libraries to know the practical steps before going to their institutional counsel. “Do your homework before you go. Show the usefulness of your work, and have a plan for why you will digitize, how you will distribute, and what you will do with the takedown request.”

After the panel, Tom Rieger, the Manager of the Digitization Services Section at the Library of Congress (LOC), discussed the LOC’s 2020 strategic plan. He mentioned that their primary mission is to serve the members of Congress, the people of the USA, and researchers all over the world by providing access to collections and information that can assist them in decision making. To achieve this mission, the LOC plans to collect and preserve born-digital materials, provide access to these materials, and provide services that help people access them. They will also migrate all formats to an easily manageable system, actively collaborate with many different institutions to empower the library system, and adopt new methods for fulfilling their mission.

In the evening, there were different workshops about tools and APIs that the IA and their partners provide. I was interested in the RDM workshop by Max Ogden and Roger Macdonald, because I wanted to explore ways we can support and integrate this project into the UC Berkeley system. I learned more about how the Dat Project works through a live demo by Ogden. We also learned about the partnership between the Dat Project and the Internet Archive to start storing scientific data and journals at scale.

We then formed small groups around different topics in our field to discuss the challenges we face and to generate a roadmap for the future. I joined the “Long-Term Storage for Research Data Management” group to discuss the challenges and visions of storing research data and what libraries and archives should do to make research data more useful. We started by introducing ourselves. We had Jefferson Bailey from the Internet Archive, Max Ogden and Karissa McKelvey from the Dat Project, Drew Winget from Stanford Libraries, Polina Ilieva from the University of California San Francisco (UCSF), and myself, Yasmin AlNoamany.

Some of the issues and big-picture questions that were addressed during our meeting:

- The long-term storage for the data and what preservation means to researchers.

- What is the threshold for reproducibility?

- What do researchers think about preservation? Does it mean 5 years, 15 years, etc.?

- What is considered as a dataset? Harvard considers anything/any file that can be interpreted as a dataset.

- Do librarians have to understand the data to be able to preserve it?

- What is the difference between storage and preservation? Data can be stored, but long-term preservation needs metadata.

- Do we have to preserve everything? If we open it to the public to deposit their huge datasets, this may result in noise. For the huge datasets what should be preserved and what should not?

- Privacy and legal issues about the data.

Principles of solutions

- We need to teach researchers how to generate metadata and the metadata should be simple and standardized.

- Everything that is related to research reproducibility is important to be preserved.

- Assigning DOIs to datasets is important.

- Secondary research – taking two datasets and combining them to produce something new. In digital humanities, many researchers use old datasets.

- There is a need to fix the 404 links for datasets.

- There should be an easy way to share data between different institutions.

- Archives should have rules for the metadata that describe the dataset the researchers share.

- The network should be neutral.

- Everyone should be able to host data.

- Versioning is important.

Notes from the other Listening posts:

- LIBRARY 2020: Refining the vision, mapping the collection, and identifying the contributors

- WEB ARCHIVING: What are the opportunities for collaborative technology building?

- DIGITIZATION: Scanning services–develop a list of key improvements and innovations you desire

- DISCOVERY: Open Library– ideas for moving forward

At the end of the day, Polina Ilieva, the Head of Archives and Special Collections at UCSF, wrapped up the meeting by giving her insight and advice. She mentioned that for accomplishing their 2020 goals and vision, there is a need to collaborate and work together. Ilieva said that the collections should be available and accessible for researchers and everyone, but there is a challenge of assessing who is using these collections and how to quantify the benefits of making these collections available. She announced that they would donate all their microfilms to the Internet Archive! “Let us all work together to build a digital library, serve users, and attract consumers. Library is not only the engine for search, but also an engine for change, let us move forward!” This is how Ilieva ended her speech.

It was an amazing experience to hear about the 2020 vision of the libraries and be among all of the esteemed library leaders I have met. I returned with inspiration and enthusiasm for being a part of this mission and also ideas for collaboration to advance the library mission and serve more people.

–Yasmin AlNoamany

Connect Your Scholarship: Open Access Week 2016

Open Access connects your scholarship to the world, and for the week of Oct. 24-28, the UC Berkeley Library is highlighting these connections with five exciting workshops and panels.

What’s Open Access?

Open Access (OA) is the free, immediate, online availability of scholarship. Often, OA scholarship is also free of accompanying copyright or licensing reuse restrictions, promoting further innovation. OA removes barriers between readers and scholarly publications—connecting readers to information, and scholars to emerging scholarship and other authors with whom they can collaborate, or whose work they can test, innovate with, and expand upon.

Open Access Week @ UC Berkeley

OA Week 2016 is a global effort to bring attention to the connections that OA makes possible. At UC Berkeley, the University Library—with participation from partners like the D-Lab, California Digital Library, DH@Berkeley, and more—has put together engaging programming demonstrating OA’s connections in action. We hope to see you there.

Schedule

To register for these events and find out more, please visit our OA Week 2016 guide.

- Digital Humanities for Tomorrow: 2-4 pm, Monday October 24, Doe Library 303

- Copyright and Your Dissertation: 4-5 pm, Monday October 24, Sproul Hall 309

- Publishing Your Dissertation: 2-3 pm, Tuesday October 25, Sproul Hall 309

- Increase and Track Your Scholarly Impact: 2-3 pm, Thursday October 27, Sproul Hall 309

- Current Topics in Data Publishing: 2-3 pm, Friday October 28, Doe Library 190

You can also talk to a Library expert from 11 a.m. – 1 p.m. on Oct. 24-28 at:

- North Gate Hall (Mon., Tue.)

- Kroeber Hall (Wed.–Fri.)

Event attendance and table visits earn raffle tickets for a prize drawing on October 28!

Sponsored by the UC Berkeley Library, and organized by the Library’s Scholarly Communication Expertise Group. Contact Library Scholarly Communication Officer, Rachael Samberg (rsamberg@berkeley.edu), with questions.

News: SPSS software now available to all faculty, students, & staff

Excuse the cross-posting, but I wanted to spread the word that the Campus has purchased an enterprise site license for SPSS. It can be downloaded on both your campus and personal computers.

To request a license key from Campus to download SPSS, go to https://software.berkeley.edu/spss and authenticate with your CalNet ID and passphrase.

If you are only using SPSS infrequently, you may want to access it instead through the UCB Citrix program. Instructions for that can be found in the Data Lab’s Using Research Tools via Citrix guide.

Resource guide for data services and resources at UC Berkeley

Our Data and Digital Scholarship Expertise Group is a cross-disciplinary professional learning community within The Library. This group provides guidance, informs policies, organizes instructional events and resources, and serves as a locus for campus partnerships, while its members develop expertise to serve as a resource within their division or unit. The expertise group has developed a guide to data services and resources within the Library and across campus.